A key part of Agile development is the retrospective process. A good way to uncover your current feedback cycles and find a starting point is to hold an automation-focused retrospective. Gather the team and brainstorm what currently works well, what you would like to do differently, and where you want to increase your level of automation. From this meeting, generate a ranked backlog of automation-related stories you would like to address. Then mix items from this list into the product backlog to balance advancing the product with advancing the internal infrastructure.

If the business or your Product Owner is skeptical about the value of automation, add a few small stories with the biggest payoff and be sure to demonstrate their value during the iteration demos. As a last resort, if the business or your Product Owner is completely unwilling to invest time in the infrastructure needed to deliver faster with higher quality, the team can work on automation as a flat tax applied to the estimate of each story in the product backlog. This isn't a healthy dynamic, but as you generate small wins, they will help you reinforce the benefits of the improvements in quality and speed you're producing.
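To make the flat tax concrete, here is a minimal Python sketch that inflates every story estimate by a fixed percentage and reports how many points that reserves for automation. The story names, the 10% rate, and rounding up to whole points are illustrative assumptions, not figures from this article.

```python
def apply_flat_tax(estimates: dict[str, int], tax_percent: int) -> dict[str, int]:
    """Inflate each story estimate by tax_percent, rounding up to whole points."""
    # Integer arithmetic avoids floating-point surprises (10 * 1.1 is not exactly 11).
    return {
        story: (points * (100 + tax_percent) + 99) // 100
        for story, points in estimates.items()
    }


def automation_capacity(estimates: dict[str, int], tax_percent: int) -> int:
    """Points the tax reserves for automation work across these stories."""
    taxed = apply_flat_tax(estimates, tax_percent)
    return sum(taxed.values()) - sum(estimates.values())
```

With a 10% tax, stories estimated at 3, 5, and 8 points become 4, 6, and 9, which reserves 3 points for automation work.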

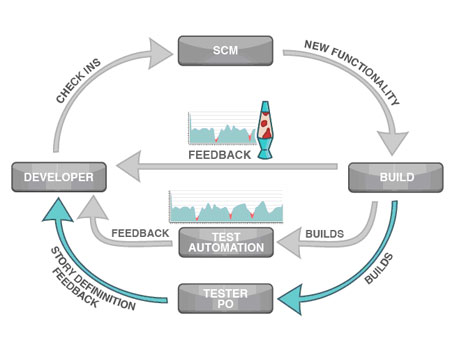

As a reminder of the feedback cycles and Lean thinking covered in Part 1 of this article, the picture above shows the development cycle. In this cycle, all batching and queuing has been removed, and quality feedback is generated at the code level, at the automated functional testing level, and at the manual regression level.

Unit Tests & Code Metrics

Unit tests and code metrics are a good place to begin. By implementing continuous integration (where the code is built and the unit tests run on a dedicated, non-developer workstation), you are most of the way toward having feedback loops at the code level. This level of automation starts with finding a dedicated machine to execute the build. How many times have you successfully compiled your code, checked it in, and later discovered that the code in the repository wouldn't compile for anyone else because you forgot to add a file? By building on a dedicated, non-developer workstation, you get feedback about the quality of the code in the repository, not the quality of the code on your machine.

Next, focus on scripting the build process. If you are developing in Java, you're probably familiar with Apache's Ant project. Ant [http://ant.apache.org/] and its .NET derivative NAnt [http://nant.sourceforge.net/] use an XML-based declarative language to describe, and ultimately execute, the build process. Ant isn't only for Java projects; I once used Ant to drive the build process of a project written in five different languages on two different platforms. Some people still prefer make or shell scripts to drive a build, but many higher-level tools are just as powerful, and their scripts are much easier to maintain.

Ultimately, you want a single command that kicks off your build and produces all the necessary build artifacts. Maven [http://maven.apache.org/] is a build environment sophisticated enough to manage all the project dependencies, manage the build process, and create a project home page complete with documentation. Maven is powerful, but it imposes its conventions on your build structure and process. If you can accept those conventions, it can be a valuable part of your automation infrastructure. Whatever build tool you pick, make sure your build infrastructure also runs your unit tests; their results provide a wealth of quality information at the code level.
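Whichever tool you choose, the single-command idea boils down to running the build steps in order and failing fast. The Python sketch below wraps that idea; the Ant target names in `BUILD_STEPS` are hypothetical placeholders for whatever your own build file defines, and in practice Ant or Maven would normally handle this sequencing for you.

```python
import subprocess
import sys

# Hypothetical Ant targets; substitute the targets your build file defines.
BUILD_STEPS = [
    ("compile", ["ant", "compile"]),
    ("unit tests", ["ant", "test"]),
    ("package", ["ant", "dist"]),
]


def run_build(steps):
    """Run (name, argv) steps in order, stopping at the first non-zero exit code.

    Returns (name, exit_code) for every step that actually ran.
    """
    results = []
    for name, argv in steps:
        exit_code = subprocess.run(argv).returncode
        results.append((name, exit_code))
        if exit_code != 0:
            break  # later steps would only bury the first failure
    return results


def build_passed(results, steps):
    """True only if every step ran and every step exited with 0."""
    return len(results) == len(steps) and all(code == 0 for _, code in results)


def main():
    """Entry point for a CI job: exit non-zero if any step failed."""
    sys.exit(0 if build_passed(run_build(BUILD_STEPS), BUILD_STEPS) else 1)
```

A CI job can call `main()` and rely on its exit status to mark the build as passed or failed.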

Once you can make a build in one step, you can move on to continuous integration. According to Martin Fowler, continuous integration [http://www.martinfowler.com] is the practice of integrating new work frequently, where each integration is automatically verified by a build that runs the unit tests. There are many continuous integration tools available.

The most popular open source tool built specifically for continuous integration is CruiseControl [http://cruisecontrol.sourceforge.net/]. But continuous integration isn't all you will want to accomplish, so you may need to widen your tool search. Typically you will create a build for each integration, but eventually you will also want a nightly, deployable build. As you introduce automated functional testing, you may have several build variants that execute different levels of automation. The open source version of CruiseControl wasn't designed for this.
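One way to keep those variants manageable is to define each as an ordered list of stages, where each richer variant starts with the stages of the cheaper one. This Python sketch shows the idea; the stage and variant names are hypothetical, and a CI server such as Hudson could express them as separate jobs instead.

```python
# Hypothetical stage names. Each variant begins with the stages of the
# cheaper variant before it, so the fast checks always run first.
PER_CHECKIN = ("compile", "unit-tests", "code-metrics")
NIGHTLY = PER_CHECKIN + ("package",)
FUNCTIONAL = NIGHTLY + ("deploy-to-test", "functional-tests")

BUILD_VARIANTS = {
    "per-checkin": PER_CHECKIN,
    "nightly": NIGHTLY,
    "functional": FUNCTIONAL,
}


def stages_for(variant):
    """Return the ordered stages for a build variant."""
    try:
        return BUILD_VARIANTS[variant]
    except KeyError:
        raise ValueError(f"unknown build variant: {variant!r}") from None
```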

I prefer Hudson [http://hudson-ci.org/] for multi-project build orchestration. In Hudson, configure a job that polls your source code repository for changes and runs a build for each atomic check-in. Then create another job scheduled to run nightly to produce your nightly build. Building each atomic check-in is important because it lets the team identify the specific check-in that broke the build or degraded quality. This is the most effective feedback loop you can create. When you can tie quality metrics to each check-in, you have constant feedback about the state of your code. As a side effect, when developers check out the latest code from source control, they know the build and test status of the code at each revision; no more checking out code and then spending minutes or hours finding out whether it builds.
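Why per-check-in builds pinpoint problems can be shown in a few lines. In this Python sketch, `build` is a hypothetical callable standing in for whatever your CI server does to build and test a single revision, and the revision names are made up.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class CheckinBuild:
    revision: str
    passed: bool


def build_each_checkin(revisions, build):
    """Build every revision on its own instead of batching several together."""
    return [CheckinBuild(rev, bool(build(rev))) for rev in revisions]


def breaking_checkins(results, baseline_passed=True):
    """Revisions whose build failed when the build just before them passed."""
    culprits = []
    previous = baseline_passed  # status of the build before this history
    for result in results:
        if previous and not result.passed:
            culprits.append(result.revision)
        previous = result.passed
    return culprits
```

If r2, r3, and r4 had been built together as one batch, a red build would implicate all three check-ins; built individually, the failure points at r3.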

Once you have regular builds emitting quality information, you can use tools like Sonar [http://sonar.codehaus.org/] to visualize how your code-level quality is trending. Sonar can display information such as the number of passing unit tests for each build, build time, or the percentage of passing continuous integration builds. Static analyzers and lint tools also generate objective data about code-level quality. Good Java analyzers include FindBugs [http://findbugs.sourceforge.net/] and PMD [http://pmd.sourceforge.net/].
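Trend dashboards rest on simple arithmetic over the build history. The Python sketch below computes two of the measures mentioned above, the percentage of passing builds and the change in passing unit tests between builds; the `BuildRecord` fields are assumptions about what your CI server records, not Sonar's data model.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class BuildRecord:
    number: int
    passed: bool
    passing_tests: int


def pass_rate(builds):
    """Percentage of builds that passed; 0.0 for an empty history."""
    if not builds:
        return 0.0
    return 100.0 * sum(b.passed for b in builds) / len(builds)


def passing_test_deltas(builds):
    """Change in the number of passing unit tests from one build to the next."""
    return [later.passing_tests - earlier.passing_tests
            for earlier, later in zip(builds, builds[1:])]
```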

Automated Functional Tests

Once you have code-level automation, you can move on to automated functional testing. There are several layers of infrastructure you will need to build before you focus on the automated tests themselves. First, you need a dedicated testing machine that will receive the automated deployments or installs. Next, you need an automated way to take the build artifacts from your scripted build environment and package them for deployment or installation. This process tends to be very specific to how you deploy or install your code. As with the build process, the goal is a single command that deploys or installs your build artifacts onto a separate testing machine. Tools like Cargo [http://cargo.codehaus.org/] and Capistrano [http://capistranorb.com] are useful for automating the package-and-deploy process.
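Cargo and Capistrano handle this for real deployments, but the shape of a one-step package-and-deploy can be sketched with the Python standard library alone. In this sketch a local directory stands in for the separate testing machine and all paths are hypothetical; a real deployment would first copy the archive to another host.

```python
import shutil
import tempfile
from pathlib import Path


def package(build_dir, archive_base):
    """Bundle the contents of build_dir into <archive_base>.zip."""
    return Path(shutil.make_archive(str(archive_base), "zip", root_dir=str(build_dir)))


def deploy(archive, target_dir):
    """Install an archive into target_dir, replacing any previous deployment."""
    target = Path(target_dir)
    target.parent.mkdir(parents=True, exist_ok=True)
    # Unpack beside the target first, so a bad archive fails before the
    # currently deployed version is removed.
    staging = Path(tempfile.mkdtemp(dir=target.parent, prefix=".staging-"))
    try:
        shutil.unpack_archive(str(archive), str(staging))
    except Exception:
        shutil.rmtree(staging)
        raise
    if target.exists():
        shutil.rmtree(target)
    staging.rename(target)
    return target
```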

An essential part of packaging is an architecture in which environment-specific configuration details aren't part of the build artifacts. This is a critical control point for quality: you want the same object code that was thoroughly tested in a test environment to move seamlessly to production. Generally this is achieved with environment-specific configuration files kept separate from the build artifacts. The configuration files belong to the environment and are parsed at runtime to initialize environment-specific configuration variables.
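A minimal Python sketch of runtime configuration lookup follows, assuming INI-style files. The `APP_CONFIG` environment variable and the `/etc/myapp/app.ini` default are hypothetical names; the point is that one build artifact reads whichever file the environment provides.

```python
import configparser
import os
from pathlib import Path

# Hypothetical names: each environment (test, staging, production) supplies
# its own file at this location; the file is never packaged in the artifact.
CONFIG_ENV_VAR = "APP_CONFIG"
DEFAULT_CONFIG_PATH = "/etc/myapp/app.ini"


def resolve_config_path(environ=os.environ):
    """Pick this machine's config file: the environment variable wins, else the default."""
    return Path(environ.get(CONFIG_ENV_VAR, DEFAULT_CONFIG_PATH))


def parse_config(text):
    """Parse INI-style settings into a plain nested dict."""
    parser = configparser.ConfigParser()
    parser.read_string(text)
    return {section: dict(parser[section]) for section in parser.sections()}


def load_environment_config(environ=os.environ):
    """Read this environment's settings at runtime."""
    return parse_config(resolve_config_path(environ).read_text())
```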

If your project uses a database, you next need to consider how to automate your schema changes and database migrations. First, consider keeping the schema definition in the source code repository so the database definition stays in sync with the code that relies on it. Next, align the granularity of the schema definition with the migration points. The goal is to start from any schema version, move to any other version, and migrate existing data in the process. This should obviously work going forward, but it should also work backward so schema changes can be reverted easily when necessary. Even if you aren't using Ruby on Rails, you should understand its migration conventions and consider how those concepts might fit into your project.
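The Rails idea of numbered migrations that can run both up and down can be sketched with Python's built-in `sqlite3` module. This is not the Rails API: the migration list, the table names, and the use of SQLite's `PRAGMA user_version` to record the current schema version are all assumptions made for illustration.

```python
import sqlite3

# (version, upgrade SQL, downgrade SQL); table names are hypothetical.
MIGRATIONS = [
    (1, "CREATE TABLE customer (id INTEGER PRIMARY KEY, name TEXT NOT NULL)",
        "DROP TABLE customer"),
    (2, "CREATE TABLE orders (id INTEGER PRIMARY KEY, customer_id INTEGER, total REAL)",
        "DROP TABLE orders"),
    (3, "ALTER TABLE customer RENAME TO client",
        "ALTER TABLE client RENAME TO customer"),
]


def schema_version(conn):
    """SQLite stores a free integer in its header; use it as the schema version."""
    return conn.execute("PRAGMA user_version").fetchone()[0]


def migrate(conn, target):
    """Move the schema from its current version to target, forward or backward."""
    if target not in {0, *(v for v, _, _ in MIGRATIONS)}:
        raise ValueError(f"unknown schema version: {target}")
    if conn.in_transaction:
        raise RuntimeError("commit or roll back pending work before migrating")
    current = schema_version(conn)
    conn.execute("BEGIN")  # SQLite DDL is transactional: all steps or none
    try:
        if target > current:
            for version, up, _ in MIGRATIONS:
                if current < version <= target:
                    conn.execute(up)
        else:
            for version, _, down in reversed(MIGRATIONS):
                if target < version <= current:
                    conn.execute(down)
        conn.execute(f"PRAGMA user_version = {int(target)}")
    except Exception:
        conn.execute("ROLLBACK")
        raise
    conn.execute("COMMIT")
    return schema_version(conn)
```

Because SQLite runs DDL inside transactions, a failed step rolls back the whole migration; not every database supports transactional DDL, so check yours. A down migration that drops a table also discards that table's data.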

By automating the deployment and migration phases, or the installation phase, the team gets instant feedback when a code-level change breaks anything related to deployment or installation. Without this level of automation, deployment issues are typically found very late, as final code moves into staging or even production environments. I know many people in configuration management who have the ugly job of tracking down code-level changes that broke the application's ability to deploy or install. Finding these issues late in the process is terribly inefficient.

Manual GUI and Regression Testing

Now that you have the infrastructure to automatically deploy or install into arbitrary environments in a way that accounts for migrating data to new schemas, you can focus on automated functional testing. This is a deep subject area with many alternatives. There are many commercial tools for automated testing and a few good open source tools. If you are developing a web-based application, Selenium [http://selenium.openqa.org/] is a powerful tool, especially when driven with RSpec [http://rspec.info/].

The final piece of the puzzle is the ability for anyone on the team to deploy or install any build into any environment in a fully automated way. This gives the team the control to pull ready work, a key aspect of Lean's pull-based scheduling. First, you need a repository of build artifacts. This can be as simple as a file server or as feature-rich as a directory or web-based repository. The goal is to make every build available and to provide a user interface that allows any installer to be downloaded or any build to be deployed into a specific environment.
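A file-server repository can be as simple as one directory per build number. This Python sketch assumes that layout (the `build-<number>` naming is hypothetical) and publishes through a hidden staging directory so a half-copied build never shows up among the available builds.

```python
import shutil
import tempfile
from pathlib import Path

PREFIX = "build-"  # hypothetical directory naming: build-41, build-42, ...


def publish(repo_dir, build_number, artifact):
    """Store an artifact under its build number; partial copies are never visible."""
    repo = Path(repo_dir)
    repo.mkdir(parents=True, exist_ok=True)
    final_dir = repo / f"{PREFIX}{build_number}"
    if final_dir.exists():
        raise FileExistsError(f"build {build_number} is already published")
    # Copy into a hidden staging directory first, then rename it into place.
    staging = Path(tempfile.mkdtemp(dir=repo, prefix=".incoming-"))
    try:
        shutil.copy2(artifact, staging)
        staging.rename(final_dir)
    except Exception:
        shutil.rmtree(staging, ignore_errors=True)
        raise
    return final_dir / Path(artifact).name


def available_builds(repo_dir):
    """Published build numbers, oldest first."""
    numbers = []
    for entry in Path(repo_dir).iterdir():
        suffix = entry.name[len(PREFIX):]
        if entry.is_dir() and entry.name.startswith(PREFIX) and suffix.isdigit():
            numbers.append(int(suffix))
    return sorted(numbers)


def fetch(repo_dir, build_number):
    """Artifacts of one build, ready to hand to an installer or deploy script."""
    build_dir = Path(repo_dir) / f"{PREFIX}{build_number}"
    if not build_dir.is_dir():
        raise FileNotFoundError(f"build {build_number} has not been published")
    return sorted(build_dir.iterdir())
```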

I encourage you to find one thing you want to improve in your automation infrastructure and bring it to your next retrospective or iteration planning meeting. Try to establish a cadence in which the team addresses automation work within the iteration. As you reach reasonable levels of automation, you will improve quality by having feedback loops that reveal which check-ins are hurting quality, and you will go faster by fixing those issues while the team is still in context.

About the Author: As VP of Products for Rally Software, Zach Nies brings close to 20 years of engineering and product development experience to Rally's Agile lifecycle management solutions. Prior to joining Rally, Zach served as Principal Architect and Director of Systems Architecture for Level 3 Communications and founded a small startup that was quickly acquired by the publicly traded Creo Inc., now a division of Kodak. He also served as Chief Software Architect at Quark, where he provided the overarching technological vision for the company. Zach's product vision has won numerous industry awards, including Jolt Product Excellence awards, Seybold HotPicks, and the prized MacWorld Best of Show. Zach has served on standards bodies such as the W3C's HTML working group and currently serves on the board of directors for Agile Denver. At the age of 13, Zach began commercially publishing software and, at age 16, started a successful consulting business. A Boettcher Scholar, Zach received his BS with distinction in Computer Science Engineering from the University of Colorado at Boulder. He spends his spare time tuning his golf swing and with his family.