A perennial question in the configuration management field is how to control databases. Databases are too big and too complex to be managed as simple objects, and they have a very expressive language, SQL, that is used to describe their structure, their content, and changes to them. A newer issue is how to do enterprise CM. Although some tool vendors have attempted to provide support for large-scale systems, full support for complex enterprise systems is still lacking.

CM is important when either the structure or the content of a critical database is being managed. It is essential for the growing number of business systems driven by metadata. Despite this need, there have been no general solutions available. Commercial vendors have not taken the obvious step of providing generalized configuration and/or change management support, and CM solutions for databases have focused on the structure only, treating content and other attributes, including triggers and stored procedures, as an installation problem rather than a development problem.

A newer issue is how to do enterprise CM. Some CM tool vendors have attempted to provide support for large-scale systems (e.g., component-based development), and vendors have been attempting to support geographically distributed development for some time. Full support for complex enterprise systems is still lacking, however. Supporting complex systems, like supporting complex organizations, is a hard problem.

A recent project needed both functions. A multi-technology system demanded tracking of changes from requirements through retirement. The system included Cobol, Java, and .NET components, but the key component was a metadata tool for application development. The metadata tool was built on an Oracle database, where business logic and business variables were defined and maintained. Software development in this environment centered around changes to the database made with the metadata tool, and involved managing change across the full set of different technologies, across different organizations, in offices that were widely separated. In short, we needed to provide both enterprise CM and database CM.

We realized that we were facing two problems with one solution: the diversity of the enterprise forced us to abandon the traditional “software release” approach to CM in favor of a higher-level, more abstract solution. Taking an abstract view let us redesign the development, test, and deploy workflow so that we could manage source code and database content updates interchangeably. We call this technique Longacre Deployment Management™ (LDM), and use it to track the progression of all types of intellectual property (IP), including program logic, hardware and software configuration, and database structure or content, through a single, abstract life cycle.

We believe that LDM is essential to successfully implementing CM in a database-heavy enterprise, and that it provides significant advantages over other software release–based techniques where divergent technologies are involved. The novelty of the LDM technique inspired the writing of this case study, along with an accompanying article describing the technical details of implementing LDM. We have endeavored to separate the two. This case study presents the situation faced by our client, and the solutions we devised. The details of LDM provided here are only those relevant to discussing the CM system we implemented. Likewise, the client details in the LDM article are confined to those relevant to providing implementation hints for LDM.

Background

We'll call our client “Really Big Financial,” (or RBF) and hope they don't sue us. RBF is an American company providing insurance to a niche market. That niche has experienced sustained, profitable growth. As usual, market growth means buyouts and mergers. RBF has development and production facilities spread across North America and it suffers from the usual confusion caused by trying to combine separate organizations.

RBF does software development as an IT function, but not as an R&D function: the primary purpose of software development is to support its own business. Its software customers are other RBF divisions or business partners. There is a smorgasbord of different technologies at play: there are COBOL applications doing back-office functions and supporting thin-client systems. There are Java and .NET middle-tier and thick-client applications. There is also a new but substantial metadata-based application development environment supporting a web interface.

RBF's legacy software systems continue to be supported, but many of those systems are being discarded as the insurance products that they support reach end-of-life. Going forward, all RBF insurance policy-writing applications are being implemented using either .NET (only a few) or the metadata application development system (MDS). The legacy applications include several software services.

These services remain viable, so RBF is encapsulating them as web services rather than rewriting them. While Service-Oriented Architecture is not the guiding philosophy at RBF, the principles of SOA are providing value.

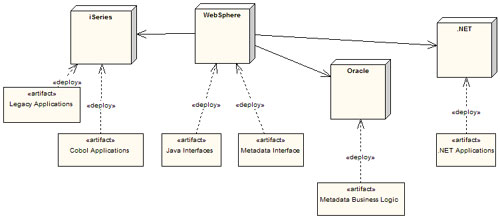

Illustration 1: Technologies on production servers at RBF

MDS is a software development environment built atop a database. It is similar to the form and query construction tools provided with Microsoft Access™. The database is used as both a storage environment and a target platform: “code” built in the proprietary GUI is translated into table data or SQL stored procedures. The metadata defines the variables on which the logic operates, the user interface forms and transitions, and all of the internal logic of the application, including edits for data entering the system and transformations for data delivered externally for disposition and processing.

Metadata is deployed from one database to another (for example, from the development database to a testing database) by exporting it as SQL statements. The amount of metadata updated during development of a system change, including data element definitions and the logic that operates on them to address business requirements, can range in scope from a single generated SQL statement to tens of thousands of statements, with few natural boundaries. While developers generally have some idea of the likely impact of a change, there are still occasional surprises that result in hundreds (or thousands) of statements. This is one of the side effects of working in an environment that mixes human- and computer-generated code.

Because the content of the metadata database is source code, it must be managed just like any other source code project. Because the metadata exists only in a database, the CM challenge is immediate: existing approaches don't support databases, but RBF has staked their future on a development system that is all database.

Development in the MDS is done against a single, shared development database. This is a basic implementation of the Shared Workspace pattern: changes made by one developer are immediately visible to others in the development environment. The MDS deployment tool includes logic to serialize changes that are exported together, but detecting and understanding interdependencies among different work items by different developers, sometimes for different insurance products, was and is a source of deployment problems.
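The article does not say how such interdependencies could be detected. One minimal sketch, assuming a hypothetical export in which every transaction records its SCR and the metadata row (table and key) it wrote, is to flag any pair of SCRs that touched the same row. The names `Txn` and `find_interdependent_scrs`, and the table and key values, are illustrative only and are not part of the MDS.

```python
from collections import defaultdict
from itertools import combinations
from typing import NamedTuple


class Txn(NamedTuple):
    """Hypothetical record of one transaction in the shared development database."""
    txn_id: int
    scr: str    # change-tracking entry the transaction was made under
    table: str  # metadata table the transaction wrote
    key: str    # primary key of the row it wrote


def find_interdependent_scrs(txns):
    """Map each pair of SCRs that wrote a common metadata row to the rows they share."""
    writers = defaultdict(set)  # (table, key) -> SCRs that wrote that row
    for t in txns:
        writers[(t.table, t.key)].add(t.scr)

    shared = defaultdict(set)
    for row, scrs in writers.items():
        for a, b in combinations(sorted(scrs), 2):
            shared[(a, b)].add(row)
    return dict(shared)


# Two developers touched the same rating formula under different SCRs.
txns = [
    Txn(1, "SCR-101", "rate_formula", "AUTO-TX-7"),
    Txn(2, "SCR-102", "rate_formula", "AUTO-TX-7"),
    Txn(3, "SCR-102", "screen_field", "POLICY-NAME"),
]
print(find_interdependent_scrs(txns))
# {('SCR-101', 'SCR-102'): {('rate_formula', 'AUTO-TX-7')}}
```

Row overlap only catches direct write conflicts; a change to a variable that another SCR's logic merely reads would need a richer dependency model.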

An added challenge of the metadata system was the high degree of structure in the development process. Unlike conventional 3GL systems, where an ad-hoc test framework can be built for any module, the metadata implementation requires that applications be built from their core functions outward. The MDS vendor made this into a strength: the highly structured nature of the application and the interpreted, integrated development environment mean that application code, once developed, can easily be reused or turned into templates.

The gradual state-by-state roll-out of RBF's products has been a snowball of accumulating functionality, with each state delivering a valuable product as well as making improvements to the core code that forms the basis for subsequent products. This has enabled RBF to achieve high rates of development throughput: new product development has gone from months per product to weeks per product.

After a time, RBF's CIO was dealing with rival camps claiming that the MDS project could or could not work, would or would not be useful, and so on. Eventually, the CIO wished a pox on all their houses, and brought in an outside specialist. Ray Mellott started in IT in 1967. He has worked as a programmer, analyst, infrastructure person, first- and second-line manager, and CIO (three times). He has built a data center in a parking garage, built organizations from scratch, and fixed problems others lost jobs over.

When Ray arrived, the MDS vendor was both developing the system and using the system to develop products. Deliveries were late, and there was tension between RBF and the vendor. The distributed nature of the enterprise, the transition from external to internal development, and the difficulty of working with the MDS vendor as both system provider and development shop had produced a finger-pointing, doubting culture that had to be fixed if the project was to see any kind of success.

Ray's task was to make the MDS products succeed. Success in this case would mean:

· Demonstrating to the internal audience that the system would work, reliably and at the target transaction volumes.

· Building a team capable of mastering the MDS, and capable of working together in a multi-technology, geographically distributed environment against increasingly ambitious schedules.

· Bringing the system development and system management functions in-house.

· Discovering and addressing the hidden issues resulting from the technology.

· Transforming the development process from the R&D-style, single-technology approach used by the vendor to a production engine capable of supporting RBF's business strategies, including a continually accelerating pace of product development.

Ray's personal measure of success was to eliminate his own job: either the system would be eliminated for cause, or the system would function in all respects.

Ray's style of management was described by one of the team as: “He asks you to do something totally ridiculous, and keeps asking why you can't do it and ignoring your answers, until it's easier to just do it than keep arguing about it.” Becoming a team, building products to schedule, and using a tool that nobody was sure of all seemed totally ridiculous, but it became easier to do them than to explain why they couldn't be done.

By spring of 2006 the new RBF team was functioning and products were being delivered, but costs were high. Additionally, the development cycle was filled with errors and problems, most of which were human in nature. Ray knew, and demonstrated to senior staff, that the team was experiencing configuration management and change control problems. The mostly manual process received from the MDS vendor worked in a single-technology, low-volume scenario. But as the team grew and the system was integrated with other production systems, RBF needed new approaches to CM and change management. Because of the metadata component, neither the RBF team nor most of the CM community was familiar with needs like these.

When software development was handled by the MDS vendor, these problems were hidden from RBF. (Actually, they appeared as schedule delays, blown deliveries, and the like.) As the pace of development accelerated, and as product development was handed off from the MDS vendor to the RBF team, these issues were exposed as CM problems instead of mysterious bugs and schedule delays. CM had to change from a series of interesting problems handled by people with intimate knowledge of system nuances, to a set of procedures that avoided or prevented problems. The team needed a CM guru. Ray found his way to the CM Crossroads website. To quote his original post: “Most builds have any number of items; and a complete build can consist of hundreds of uniquely identified items, all manually identified, and all manually prepared. The potential for human error is enormous, and we are realizing this potential all too often.”

Austin Hastings has more than 20 years of experience in the software industry. He has been a developer, system administrator, CM weenie, and consultant. Currently he serves as principal consultant for Longacre, a software CM and process automation consultancy. Austin got involved as a result of Ray's call for advice on CM Crossroads. It became clear in discussion with Ray that management of the databases underlying the MDS would be the key to any solution. After some discussion of the nature and challenges of the project, Ray gave Austin a simple mandate, “Help us fix it.” That meant:

· Helping the team understand in depth the scope, requirements, and benefits of CM.

· Causing the team to embrace some process automation (low hanging fruit) that could reduce effort and improve quality outside of the core CM domain.

· Assisting in the selection of a software CM tool vendor.

· Identifying obvious candidates for process automation enabled by the CM tool.

· Leading the roll-out of the new tool, and demonstrating to the internal audience (again) that the system works, and can be adopted by the IT community at large.

· Implementing changes enabled by the above, while improving development schedules.

That mandate has been satisfied as of this writing. While some tasks have been handed off to RBF staff for completion, the team has grabbed the low-hanging fruit, found and implemented an integrated CM solution, automated much of the CM activity, and is enjoying the benefits in reduced human error and improved productivity.

The Problems

The project had to overcome a multitude of problems. Most of those problems were not CM related. Ray can discuss those in a different forum. Four problems dealt directly with CM: manual processes, an over-simple CM approach, the broad mix of technology at RBF, and the unusual nature of the metadata system (i.e., database as source code).

Problem: Manual Processes

The build and deployment processes established for RBF by the MDS vendor were almost entirely manual, were completely dependent on developers to accurately record and communicate the activities conducted, and required intimate knowledge of the internal workings of the system. Changes made in the MDS were tracked using a built-in tracking system in the MDS that had to be correlated with the team's own tracking system. Changes to Java source code and forms templates were controlled using CVS. Java code was built privately by developers, with no record of the source of the build. The resulting binary files were delivered to a shared drive, where the deployment team had to choose the correct files based on their own instincts or on the contents of emails from development or QA.

When QA requested deployment of a set of changes to a test server, the deployment group inspected the change list to understand its contents and decide the deployment order of the changes. The team then grouped the changes together by impact or content and manually executed procedures and scripts to produce deployable artifacts. These were then copied to the right locations and executed or installed. Nearly all build and deployment operations required remotely connecting to different machines via SSH, RDP, or VNC connections.

Not surprisingly, a single misstep could result in significant down-time. During Austin's initial visit (May ’06), half the deployment team plus a few members of the QA group were trying to diagnose a failed deployment. Understanding the problem and devising a solution occupied a group of three to six people for three days. At one point, the team was poring through a user's Microsoft Outlook mailbox trying to trace the installation history of certain features.

Problem: Overly Simplistic CM and Change Control

As a new organization, the RBF software team had little native concept of the principles of configuration management or how CM tools could be used for benefit. The team had no overall idea what was needed for good CM, and no clear grasp of the value and importance of good CM. They were using a commercial change tracking system (PVCS Tracker) configured and maintained by the MDS vendor. Like most developers, they had some basic familiarity with version control tools and concepts. Generally, that familiarity started with check out and ended with check in.

The Tracker setup inherited from the vendor was configured for the vendor, not RBF. Because the system wasn't a good fit for RBF, more and more of the system was abandoned or ignored as time went by. When we eventually migrated to a new system, more than half of the Tracker fields were unused, irrelevant, or filled with inconsistent data.

Probably the subtlest problem was the R&D-style development philosophy built into the system. The MDS vendor, of course, is an R&D company. They do software development, the software goes through a release cycle, and all the usual characteristics of COTS software development apply: bug fixes, release planning, feature development, etc.

That doesn't apply to RBF's internal systems: they have some concrete schedule constraints (legal commitments regulating the effective dates of changes to their products) but they can make other system improvements on a day-by-day basis. This difference means that the idea of different releases is not a coarse-grained, discrete partition of the growth of the system, but rather a fuzzy, permeable notion that guides system development but does not restrict it. This is a subtle difference, much like the difference in accounting between using a calendar year and using a fiscal year ending in March. That subtle difference had a cost, though, which was expressed in unused fields and wasted effort.

Another problem with the CM setup at RBF was a bias towards version control, a common failing of shops with limited CM experience. The team knew that CM was important, but lacked a clear vision of the requirements and benefits. The cultural bias of RBF's IT staff was to implement minimum-coverage CM (an interesting trait for people selling insurance). The MDS team used Tracker, the COBOL group used MKS Implementer (another COTS product, focused on facilitating deployments), and the Java and distributed teams opted for CVS to handle basic library management. Having once done CM by cloning the vendor's setup, the general IT audience didn't understand that their requirements had grown well beyond what might work in a technology stovepipe.

The next problem was in the tracking of change requests. The system provided by the MDS vendor used Tracker to manage change requests, and then used the change-tracking functions built in to the MDS to identify individual work items. The connection between the two tools was entirely manual. Developers were assigned a change order in Tracker, entered redundant data in the MDS tracking system, made the appropriate metadata changes, then manually transferred update information back to Tracker to close development of the issue.
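Where two tracking systems are kept in step by hand, even a simple cross-check makes drift visible. The sketch below assumes each side can be exported as a plain mapping; the data shapes, identifiers, and the `reconcile` function are hypothetical and are not features of Tracker or the MDS.

```python
def reconcile(tracker, mds):
    """List disagreements between two hand-maintained tracking systems.

    tracker: issue id -> set of SCR ids the developer copied back into Tracker
    mds:     SCR id -> issue id the developer typed into the MDS tracking system
    """
    problems = []
    for issue, scrs in sorted(tracker.items()):
        for scr in sorted(scrs):
            if scr not in mds:
                problems.append(f"{issue}: lists {scr}, unknown to the MDS")
            elif mds[scr] != issue:
                problems.append(f"{issue}: lists {scr}, which the MDS links to {mds[scr]}")
    # SCRs that exist in the MDS but were never reported back to Tracker.
    claimed = {scr for scrs in tracker.values() for scr in scrs}
    for scr in sorted(mds):
        if scr not in claimed:
            problems.append(f"{scr}: not recorded against any Tracker issue")
    return problems


tracker = {"CR-1": {"SCR-10"}, "CR-2": {"SCR-11", "SCR-12"}}
mds = {"SCR-10": "CR-1", "SCR-11": "CR-3", "SCR-13": "CR-2"}
for line in reconcile(tracker, mds):
    print(line)
# CR-2: lists SCR-11, which the MDS links to CR-3
# CR-2: lists SCR-12, unknown to the MDS
# SCR-13: not recorded against any Tracker issue
```

A check like this does not remove the redundant data entry, but it turns silent inconsistencies into a report someone can act on.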

Because the RBF system uses multiple different technologies, the team could not (and still cannot) use the change-tracking functions of the MDS independently. Official change tracking has to take place in a single system common to all the different technologies. The team was forced to use a simple Tracker life cycle to manage both change request tracking and software deployment.

With a database at the heart of the system, there was no good, general-purpose way to undo arbitrary changes. Since work items could not be rolled back after they were applied, there was no way to fail a change and back it out. This meant that requests in the system were one-way only: once a change was marked as ready for testing, there was no way to add to the change and re-deploy it. Instead, the failure of a change caused a new change request to be (manually) opened. The complete record of a change was frequently spread across several requests, with each request containing a partial delivery or an incompletely successful attempt to resolve the issue. To make matters worse, the system depended on humans manually recording the relationship of one change item to another and the interrelationships of different work packages and issues. The individual issues were pieces of the overall history of the change, with no explicit connection linking them together. Individual QA and development staff may have been briefly aware of all the various parts, but any post facto investigation of the change was doomed to failure.
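What was missing was an explicit link between a failed request and the request opened to rework it. Assuming a hypothetical `follows` field on each request (no such field is described in the Tracker setup RBF inherited), the complete record of a change can be recovered mechanically. The sketch assumes each request is reworked at most once and that the links contain no cycles; `Request` and `change_history` are illustrative names.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Request:
    req_id: str
    summary: str
    follows: Optional[str] = None  # hypothetical link to the request this one reworks


def change_history(requests, req_id):
    """Return the whole chain of requests for one change, oldest first,
    starting from any request in the chain."""
    by_id = {r.req_id: r for r in requests}
    successors = {r.follows: r.req_id for r in requests if r.follows}

    # Walk back to the original request.
    root = req_id
    while by_id[root].follows is not None:
        root = by_id[root].follows

    # Walk forward through every rework.
    chain = [root]
    while chain[-1] in successors:
        chain.append(successors[chain[-1]])
    return chain


reqs = [
    Request("CR-7", "add rating variable"),
    Request("CR-9", "fix formula delivered under CR-7", follows="CR-7"),
    Request("CR-12", "finish screen change missed in CR-9", follows="CR-9"),
]
print(change_history(reqs, "CR-9"))  # ['CR-7', 'CR-9', 'CR-12']
```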

In summary, the in-place CM system as a whole, while simple, did not provide enough returned value for the work invested. Although versions of files were identified, and individual work deliveries were tracked, there was no support for higher-level activity. The ‘bigger picture’ items, including delivery of changes to production systems, aggregate information about the work performed for a single change item, impact and traceability of upstream changes, and interrelations and dependencies among work items and/or changes, were simply not available. Moreover, the absolute dependence on human beings to reliably record perfectly accurate information in the work records meant errors were inevitable and frequent.

Problem: Broad Technology Mix

There are several different technologies used in the RBF product architecture. All of them, aside from the MDS, have well-understood software CM solutions. Unfortunately, those solutions don't share much in common. Deploying COBOL and deploying Java are different operations. Some of the technologies used at RBF are rare, including several different automated forms generation tools. The forms development processes generally look like C-language software development, slightly different from everything else in the system.

At one point, Ray was asked to deliver a briefing to senior staff addressing the question of why it takes so long with this system. One part of the answer was that to deliver a new product requires the scheduled, integrated delivery of IP in nine different technology domains.

Problem: Stand-Alone Metadata

In a traditional system most of the business logic, and the variables on which the logic operates, is encapsulated in libraries or programs. When a change is required the relevant modules are checked out of a library tracked by a CM tool, changes are made and tested, and the updated code is checked in. Deployment then involves pulling the changed module and applying technology-dependent processes to make the changed module available to its users. This is not the case with the MDS, and to understand the situation requires some background.

The MDS requires a database, and MDS products are built and maintained completely in a database context. Changes are applied with transactions, and those transactions are reified in the system. Transactions applied by a user are grouped together in sessions, and sessions are associated with change tracking entries, called SCRs, in an internal change tracking system. While session and transaction identifiers are accessible, deploying by SCR is the granularity that makes sense.
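The containment described here (transactions inside sessions, sessions attached to SCRs) can be modeled directly. A minimal sketch, assuming transaction ids increase in commit order and that each transaction carries the SQL the MDS would export for it; the class names, table names, and SQL text are hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class Transaction:
    txn_id: int  # assumed to increase in commit order
    sql: str     # statement exported for this transaction (illustrative)


@dataclass
class Session:
    session_id: int
    scr: str     # change-tracking entry the session is associated with
    transactions: list = field(default_factory=list)


def export_scr(sessions, scr):
    """Collect every statement belonging to one SCR, in commit order,
    even when the SCR's work was spread over several sessions."""
    txns = [t for s in sessions if s.scr == scr for t in s.transactions]
    return [t.sql for t in sorted(txns, key=lambda t: t.txn_id)]


sessions = [
    Session(1, "SCR-200", [
        Transaction(5, "UPDATE md_screen SET x = 120 WHERE widget = 'DriverAge'"),
        Transaction(2, "INSERT INTO md_variable (name) VALUES ('DriverAge')"),
    ]),
    Session(2, "SCR-201", [Transaction(3, "UPDATE md_form SET title = 'Quote' WHERE id = 9")]),
    Session(3, "SCR-200", [Transaction(4, "UPDATE md_formula SET expr = 'base * 1.1' WHERE id = 42")]),
]
for stmt in export_scr(sessions, "SCR-200"):
    print(stmt)
# INSERT INTO md_variable (name) VALUES ('DriverAge')
# UPDATE md_formula SET expr = 'base * 1.1' WHERE id = 42
# UPDATE md_screen SET x = 120 WHERE widget = 'DriverAge'
```

Deploying by SCR means the unit handed to a target database is the SCR's full, ordered statement list, not an individual session or transaction.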

Metadata stores simple values, such as the X and Y coordinates of a widget on a screen. It also stores business logic like the formulas used to compute insurance rates for various categories of risk. The MDS provides an interface for all of this. The MDS does not store plain old data. When an insurance policy is written, the fields are filled with data. That data is plain data, written to tables on a database server. The interface and computations are controlled by metadata. We are focusing here only on the metadata.

Because of the innate sensitivity of database content to the order of execution of statements, the commands associated with a change can be executed against a particular database only once. The metadata changes are cumulative, so data can be added or modified, even removed, but individual SQL statements cannot be undone. Once metadata changes are delivered, defects in the new configuration are addressed by delivering additional changes that further adjust the metadata configuration. These additional changes are tracked with new transaction, session, and SCR identifiers.
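The run-once rule suggests keeping, for every target database, a ledger of what has already been applied there. This is a minimal sketch, assuming statements are executed through a caller-supplied function (for example, a DB-API cursor's `execute` method); `DeploymentLedger` is a hypothetical name, not part of the MDS deployment tool.

```python
from typing import Callable


class DeploymentLedger:
    """Hypothetical per-database record of the SCRs already applied there.

    Metadata statements cannot be undone or safely re-run, so the ledger
    refuses to apply the same SCR twice; a correction must arrive as a new SCR.
    """

    def __init__(self, database: str) -> None:
        self.database = database
        self.applied: list = []

    def apply(self, scr: str, statements: list, execute: Callable[[str], None]) -> None:
        if scr in self.applied:
            raise ValueError(f"{scr} was already applied to {self.database}")
        for stmt in statements:
            execute(stmt)
        # If execute fails part-way, the database is partly updated but the SCR
        # is not recorded here; a real tool would have to track that state too.
        self.applied.append(scr)


executed = []
qa = DeploymentLedger("QA")
qa.apply("SCR-300", ["UPDATE md_formula SET expr = 'base * 1.2' WHERE id = 42"], executed.append)
try:
    qa.apply("SCR-300", ["UPDATE md_formula SET expr = 'base * 1.2' WHERE id = 42"], executed.append)
except ValueError as err:
    print(err)  # SCR-300 was already applied to QA
# A defect in SCR-300 is fixed going forward, with a new SCR.
qa.apply("SCR-301", ["UPDATE md_formula SET expr = 'base * 1.15' WHERE id = 42"], executed.append)
print(qa.applied)  # ['SCR-300', 'SCR-301']
```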

A metadata change can affect a nearly limitless number of records. Because each insurance product must be developed separately, the very first update performed on a product is to copy all the metadata from an existing product to serve as an initial baseline. Things like the product name are changed, obviously. This common operation is essentially a one-click edit that associates hundreds of thousands of metadata records with a single change operation. Note that a single metadata change or collection of changes does not necessarily constitute a complete module or subprogram. Instead, metadata changes commonly have the same characteristics as software changes: fixing a bug in existing logic, adding new variables to the system, refactoring existing code, and so on.

While the MDS automatically interleaves transactions that are packaged together in a bundle of simultaneously exported changes, dependencies and relations between the bundles must be dealt with at a higher level. Moreover, these interactions have to be evaluated separately for each target environment: QA may prefer to receive changes in a particular order, but legal and business reasons may affect the order in which entire new products are deployed. If a state regulatory agency imposes a 30-day delay, then the planned deployment sequence flies out the window. There are, unfortunately, some global items in the MDS. The regulatory delay scenario is real, and problems caused by deployment of global changes out of order are also real.
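Ordering bundles for one environment amounts to a topological sort with tie-breaking: the dependencies between bundles are fixed, while each environment supplies its own preferred order. The sketch below uses Kahn's algorithm with a priority queue; the bundle names are hypothetical, a global change is modeled as a bundle the product bundles depend on, and every bundle is assumed to appear as a key in the dependency map.

```python
import heapq


def deployment_order(depends_on, preferred):
    """Order bundles for one target environment.

    depends_on: bundle -> set of bundles that must already be deployed there
                (every bundle, including dependencies, must be a key)
    preferred:  the order this environment would like, all else being equal
    Dependencies always win; ties are broken by the preferred order.
    """
    rank = {b: i for i, b in enumerate(preferred)}
    remaining = {b: set(deps) for b, deps in depends_on.items()}
    dependents = {b: set() for b in remaining}
    for b, deps in remaining.items():
        for d in deps:
            dependents[d].add(b)

    # Bundles with no unmet dependencies, keyed by preference rank.
    ready = [(rank.get(b, len(rank)), b) for b, deps in remaining.items() if not deps]
    heapq.heapify(ready)
    order = []
    while ready:
        _, b = heapq.heappop(ready)
        order.append(b)
        for nxt in dependents[b]:
            remaining[nxt].discard(b)
            if not remaining[nxt]:
                heapq.heappush(ready, (rank.get(nxt, len(rank)), nxt))
    if len(order) != len(remaining):
        raise ValueError("circular dependency between bundles")
    return order


deps = {"GLOBAL-1": set(), "PRODUCT-TX": {"GLOBAL-1"}, "PRODUCT-OK": {"GLOBAL-1"}}
print(deployment_order(deps, ["PRODUCT-TX", "PRODUCT-OK", "GLOBAL-1"]))
# ['GLOBAL-1', 'PRODUCT-TX', 'PRODUCT-OK']
# A regulatory delay on the TX product changes only the preference, not the dependencies:
print(deployment_order(deps, ["PRODUCT-OK", "PRODUCT-TX", "GLOBAL-1"]))
# ['GLOBAL-1', 'PRODUCT-OK', 'PRODUCT-TX']
```

Keeping dependencies and preferences separate means a late change in business priorities can be absorbed by re-running the sort, while the global change still lands first.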

The Solutions

At the outset, Ray and RBF's CIO asked for some low-hanging fruit: some items that could be accomplished in minimal time with existing resources that would show significant, immediate return on the effort. For almost every shop, the fastest ROI is going to come from some kind of automation effort, and RBF was no exception. In fact, because of the numerous manual procedures they were following, automation was almost too easy.

With the help of a skilled local VB contractor who was already part of the deployment team, we established a tracking database for bundling/deployment operations, and a Windows GUI to drive it. We then provided the team's customers (development and QA) with a read-only interface to the database, replacing a shared Excel spreadsheet.

This bespoke application, plus some Perl scripting, converted a procedure that took essentially a full day into a straightforward operation that could be performed by a knowledgeable user in an hour or less (usually less). It also significantly reduced the potential for human error by taking the humans out of much of the process.

It did make the whole process less flexible. The old system had so much human involvement that a bogus request for deployment (from human error) could be converted into a useful one by the deployer. With the new system, what is requested is generally what is delivered, so the garbage-in, garbage-out rule applies. But the customers are smart, savvy people, so this isn't much of an issue.

With Tracker being unsupported, and the complex development and operations environment at RBF, we knew that we needed a new tool that offered tight integration between the source code control and issue tracking components, and that provided a powerful mechanism for integrating with external systems. Because RBF has had more than their share of failed acquisitions and shelfware, project and senior management were acutely aware of the need for a clean, successful selection and acquisition process.

We devised an RFP and contacted all of the major CM tool vendors. In addition to vendor responses, we prepared mock responses for the leading open source tools. The RFP process and structure was the synthesis of Ray's long experience responding to large-scale government RFPs and managing other large-scale procurement initiatives, plus Austin's experience with the CM industry. The result was a comprehensive document that addressed all of RBF's immediate and medium-term needs and permitted vendors to respond within the context of their own product paradigms (allowing, for example, both change-set– and version–based vendors to participate). The scoring, a modified market-based approach, provided both objective and subjective feedback, and prevented vendors from ghosting the responses.

We were not as formal as a government procurement initiative (we actually finished our project) but we were thorough. One vendor technical consultant commented privately that while the RFP looked intimidating initially, he found it both comprehensive and very easy to answer.

Vendor self-assessments were merged with internal ratings and subjective analysis to provide an initial ranking. The RFP approach allowed us to swiftly separate the wheat from the chaff, and objectively counter all “you should have chosen tool X” arguments. Some of the requirements in the RFP were hard, meaning we would not consider a vendor that answered negatively. Some of the vendors were inept, meaning they would not respond, or failed to completely answer the RFP.
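The article does not publish its scoring formula, which is described only as a modified market-based approach. The sketch below shows just the two filtering rules stated here (incomplete responses and a "no" on any hard requirement are dropped), followed by a plain weighted sum as a hypothetical stand-in for the actual merge of self-assessments and internal ratings. Requirement ids, weights, vendors, and scores are all invented.

```python
from dataclasses import dataclass


@dataclass
class Response:
    vendor: str
    answers: dict          # requirement id -> True if the vendor claims support
    self_score: float      # vendor's self-assessment, 0 to 10
    internal_score: float  # evaluation team's rating, 0 to 10


def rank_vendors(responses, required, hard, w_self=0.3, w_internal=0.7):
    """Drop incomplete responses and any 'no' on a hard requirement, then rank
    the rest by a weighted blend of the two scores (weights are hypothetical)."""
    ranked = []
    for r in responses:
        if not required.issubset(r.answers):
            continue  # failed to answer the whole RFP
        if not all(r.answers.get(h, False) for h in hard):
            continue  # answered negatively on a hard requirement
        ranked.append((r.vendor, w_self * r.self_score + w_internal * r.internal_score))
    return sorted(ranked, key=lambda pair: pair[1], reverse=True)


responses = [
    Response("Vendor A", {"R1": True, "R2": True}, 9.0, 6.0),
    Response("Vendor B", {"R1": False, "R2": True}, 8.0, 8.0),  # fails hard R1
    Response("Vendor C", {"R1": True}, 7.0, 9.0),               # incomplete
    Response("Vendor D", {"R1": True, "R2": False}, 6.0, 8.0),  # R2 is soft
]
print([v for v, _ in rank_vendors(responses, {"R1", "R2"}, {"R1"})])
# ['Vendor D', 'Vendor A']
```

Weighting internal ratings above self-assessment is one way to keep an optimistic vendor from scoring its way to the top.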

We swiftly narrowed the field to seven vendors, then began follow-up calls to clarify technical questions and ensure that everybody on the acquisition team had a chance to comprehend each vendor's solution. Another round of scoring was performed, subjectively evaluating some of the vendor responses. The top two candidates were brought in-house for a proof-of-concept exercise. For this, we imported some source code and a month's worth of change requests, then re-ran a few recent development operations. Our eventual choice was MKS Integrity Suite 2006.

We knew that an enterprise CM solution was not going to be available out of the box. The majority of vendor representatives were good about arranging for technical contacts so that we could work out ways to implement enterprise CM in their systems. Most vendors provide some levels of support for geographically distributed development and complex organizations. Identifying support for the various technologies required balancing platforms and technologies directly supported against the need to build a scripted framework to integrate any unsupported bits.

Similarly, we knew that no vendors offered support for database CM. This is where having an experienced CM specialist at hand paid off. After understanding the problem and applying the Feynman problem-solving algorithm, Austin developed a new approach: Longacre Deployment Management.

Our new CM tool was not enough to resolve the issues faced by the RBF development team. Since the majority of development was done in the MDS, we needed a workable solution to the database CM problem as well. After modeling the flows of different work products—Java, COBOL, and metadata—we separated the status of a change from the record of its installed location.

The eventual solution, named Longacre Deployment Management (LDM), derives from the need to keep track of the deployment of work products to different database instances, and from the fact that those changes, once deployed, cannot be reversed. All other aspects of CM are subordinated to this fact of life. LDM works by accepting the ‘forward only’ nature of deployment for individual updates, and adding a complete develop/test/rework flow using higher level objects.
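The full LDM model is described in the separate technical article and is not reproduced here. The sketch below illustrates only the single idea stated in this section: a change's status can move backward through develop/test/rework while its record of installations only grows. `Change`, `Status`, the status names, and the identifiers are hypothetical.

```python
from dataclasses import dataclass, field
from enum import Enum


class Status(Enum):
    IN_DEVELOPMENT = "in development"
    IN_TEST = "in test"
    ACCEPTED = "accepted"


@dataclass
class Change:
    """Hypothetical change record: where the change stands is kept apart
    from what has been installed where."""
    change_id: str
    status: Status = Status.IN_DEVELOPMENT
    installed: dict = field(default_factory=dict)  # environment -> work products, in install order

    def record_install(self, env: str, work_product: str) -> None:
        # Installs are only ever appended; nothing is "uninstalled".
        self.installed.setdefault(env, []).append(work_product)


c = Change("CHG-42")
c.record_install("DEV", "SCR-500")
c.status = Status.IN_TEST
c.record_install("QA", "SCR-500")
# QA finds a defect: the change goes back to development, but SCR-500 stays on QA.
c.status = Status.IN_DEVELOPMENT
c.record_install("DEV", "SCR-507")
c.status = Status.IN_TEST
c.record_install("QA", "SCR-507")
print(c.status.value, c.installed)
# in test {'DEV': ['SCR-500', 'SCR-507'], 'QA': ['SCR-500', 'SCR-507']}
```

Because the work products are opaque strings, the same record could hold a Java build or a COBOL load module next to metadata SCRs.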

The fact that RBF's software development incorporates many different technologies developed across several geographically separated offices forced LDM to use a high level of abstraction even for basic operations. Presently, LDM provides a single comprehensive technique for CM that supports almost every facet of complex enterprise development. To keep this article from crossing the line from ‘long’ to ‘gargantuan,’ the technical details of LDM are discussed in a separate article. Curious CMers are invited to read “Technical Introduction to Longacre Deployment Management™,” also available on CM Crossroads.

Conclusion

Our solutions follow Ray's management style: assume that whatever must be done, can be done. Instead of focusing on “how to deploy Java changes” or “how to deploy SQL changes” or “how to deploy COBOL changes,” we assumed that those problems had been solved and went on to build the system at a higher level.

This is not to say that the challenges of managing and deploying the various technologies are trivial. Far from it; but those challenges have all been studied, and solutions exist for each of them. Our approach has been to let those existing solutions be used as much as possible, and to concentrate on building our solution on existing, standard approaches. The value we have added comes from identifying similarities among commonplace activities and events, and abstracting those essentially similar activities as the basis for a flexible system.

The project took more than a year. In that time, Ray was able to organize the MDS adoption project into a functioning team, and Austin was able to establish consistent, repeatable procedures for software development. Going from zero to a stable, productive team that delivers a new product every couple of weeks is a radical leap.

A big part of that leap derived from the metadata system itself. The MDS, when working as intended, gave a small team a lot of force. The MDS, when properly managed using sound CM principles, turned a lot of force into a lot of actual work. When all the different teams were in the trenches together and working in harmony, their output was (and still is) impressive.

Consider this, first, a demonstration that it is possible to apply traditional CM principles, using existing, off-the-shelf CM tools, to enterprise systems (including databases). LDM, described elsewhere, is a viable, functioning solution for integrating CM of database structure and content with CM of other, traditional software technologies. A high level of abstraction let us build a solution that deals nicely with radically different technologies, with different organizational cultures, and with a geographically distributed team.

To a lesser extent, this is an encouragement for current CM professionals and R&D-style software workers to investigate the ideas and products coming from the ITIL market space. While some ITIL vendors are still focused on reporting which version of Windows is installed on every user's laptop, the existing CM vendors are making considerable strides into ITIL. Some of their implementation ideas are quite good.

The enterprise philosophy of taking well-understood development practices for granted and incorporating them into a higher-level abstraction is compatible with, and well suited to, ‘IT-style’ development (as contrasted with R&D-style development), where many technologies contribute to a business operation. Our traceability implementation, for example, connects work items directly to the server(s) they are deployed on, and leaves users to judge the quality or acceptability of the changes for themselves. Even so, we believe that this approach is valid for all styles of development, and that it represents a substantial step forward in CM for complex systems.

Automation and abstraction were the foundation of our new technique. A modern commercial tool enabled us to implement that technique quickly and effectively. The new technique itself came directly from looking at the development process as a system to be refactored. Each of these steps in turn provided a direct, tangible benefit. Together, they helped solve two big CM problems.