The more critical a system is, the more highly available that system needs to be. However, it is very difficult—if not impossible—to measure every way a system can fail or to predict how long it will take to recover. But don’t worry! There are still many test strategies you can employ to understand your system’s failures, reduce downtime, and increase availability.

Software doesn’t need to work just once; it needs to work all the time, or close to it. That has put availability right in the center of software discussions since the first payroll job was processed in the 1950s. The more critical a system is to the company running it, the more highly available that system needs to be. In the modern e-commerce world, downtime of even a few seconds can cost a company millions of dollars in revenue.

Early on, companies trying to solve the availability problem turned to costly solutions such as multiple redundant computers, each with multiple power feeds, network feeds, and arrays of disks. This ensured that any single failure in one of the servers could not bring the entire system down. These servers were installed at geographically distant data centers so that if one data center went down, the other would remain up and running. These tactics are still in use in modern data center design, and the industry continues to evolve to include applications in the cloud, containerization, autoscaling, and distributed database management systems. All of these new techniques separate the application from the underlying hardware while adding an extra layer of recoverability for when hardware does inevitably fail.

While the industry has done much to improve the ability of systems to recover from failures, the concept of availability is still very much misunderstood; availability requirements are often hand-wavy or clichéd, hard to measure, and generally ignored. Vague requirements are useful only to the extent that they convey to the tester the need to perform some sort of testing to compare the system to a seemingly random availability percentage. And while creating useful availability requirements is difficult, measuring the actual availability of your system prior to a production release is even more difficult, if not outright impossible. In my experience, this is because there are simply too many potential factors to measure during a testing phase. The best we can do is attempt to simulate the various ways a system can fail and measure its ability to recover from each failure type. It is probably more accurate to call this level of testing recoverability testing rather than availability testing.

Understanding Recoverability and Availability

To understand the difference between recoverability and availability more fully, we must first look at the general formula of system availability:

Availability = mean time between failures / (mean time between failures + mean time to recover)

This formula is often shortened to

Availability = MTBF / (MTBF + MTTR)

So, when we say “recovery,” what we are really talking about is the MTTR or, more specifically, measuring and reducing the MTTR. Availability, on the other hand, accounts for the entire equation, including the time between failures as well as the recovery time when a failure occurs. Because MTBF appears in both the numerator and the denominator, the quotient, in this case our availability measurement, is driven by how large MTTR is relative to MTBF: the smaller that ratio, the closer availability gets to 1. In this sense, the formula shows that if we want to increase our system’s availability, we need to either increase our MTBF by reducing the number of ways our system can be made to fail, or reduce the MTTR by making our system capable of recovering quickly. Doing both would be especially helpful in increasing our availability.
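As a rough illustration of the formula, the following Python sketch computes availability for a baseline system and for two improved variants. The MTBF and MTTR figures are made-up numbers chosen only to show the effect; they do not come from any real system.

```python
def availability(mtbf_hours: float, mttr_hours: float) -> float:
    """Return availability as a fraction: MTBF / (MTBF + MTTR)."""
    if mtbf_hours < 0 or mttr_hours < 0:
        raise ValueError("MTBF and MTTR must be non-negative")
    total = mtbf_hours + mttr_hours
    if total == 0:
        raise ValueError("MTBF and MTTR cannot both be zero")
    return mtbf_hours / total


# Hypothetical numbers: a failure every 500 hours, 2 hours to recover.
baseline = availability(mtbf_hours=500, mttr_hours=2)
# Fail half as often (MTBF doubled), same recovery time.
longer_mtbf = availability(mtbf_hours=1000, mttr_hours=2)
# Fail just as often, but recover twice as fast (MTTR halved).
faster_mttr = availability(mtbf_hours=500, mttr_hours=1)

print(f"baseline:    {baseline:.4%}")
print(f"longer MTBF: {longer_mtbf:.4%}")
print(f"faster MTTR: {faster_mttr:.4%}")
```

With these numbers, doubling the MTBF and halving the MTTR produce the same improvement, because both halve the ratio of MTTR to MTBF.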

Achieving higher availability means having a system that can go longer without failing (increasing the MTBF), and, when it does fail, can be brought back to a working state quickly (decreasing the MTTR). Measuring, reporting, and attempting to reduce the MTTR is the essence of recoverability testing, and it is impossible to predict a system’s availability without understanding how a system recovers when it fails.

Testing for Availability

There are two big problems with trying to directly test for availability before initial release. The first is that it is impractical to test every way a system can fail. Software systems are multilayered and extraordinarily complex, and it is impossible to predict or simulate every way a system can fail. However, formal processes such as failure mode and effects analysis (FMEA) and fault-tree analysis (FTA) can be used to understand many of the ways a system might fail, and can help the team prioritize those failures, either by the most likely to occur or the most likely to be impactful.
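A formal FMEA uses its own scoring scheme, but the prioritization idea can be sketched with a much simpler model. The example below is a simplification, not a real FMEA worksheet: the failure modes, the 1-to-5 scales, and the `risk` score (likelihood multiplied by impact) are all illustrative assumptions.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class FailureMode:
    name: str
    likelihood: int  # assumed scale: 1 (rare) to 5 (frequent)
    impact: int      # assumed scale: 1 (minor) to 5 (full outage)

    @property
    def risk(self) -> int:
        # Simplified score; a formal FMEA defines its own rating scheme.
        return self.likelihood * self.impact


# Hypothetical failure modes with made-up scores.
failure_modes = [
    FailureMode("application server loses power", likelihood=2, impact=4),
    FailureMode("network link drops on one machine", likelihood=3, impact=3),
    FailureMode("database goes down", likelihood=2, impact=5),
    FailureMode("deployment requires a reboot", likelihood=4, impact=3),
]

# Highest combined score first: these are the failures to test first.
ranked = sorted(failure_modes, key=lambda mode: mode.risk, reverse=True)

for mode in ranked:
    print(f"{mode.risk:>2}  {mode.name}")
```

Sorting on `likelihood` or `impact` alone instead of `risk` gives the two orderings mentioned above: most likely to occur, or most likely to be impactful.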

If your organization is not quite ready for a fully formalized FMEA or FTA exercise, your QA team can still figure out the more likely and impactful types of failures to test by asking a few key questions of your developers and architects:

- What are some of the ways you would expect our system to fail?

- What happens if one of our servers loses power? Is another one supposed to take over?

- What is supposed to happen if the network fails on one of our machines? How is our application supposed to respond?

- What happens when the database goes down?

- Do we have to reboot our application machines when we deploy a new version of the code to production?

There are many, many more questions that can be asked about how the system can fail, but the key here is to get the conversation going and encourage the team to think in terms of failures and how to develop the system to handle failures gracefully. From a tester’s perspective, these questions allow our testers to focus not on how much availability the system can provide, but on how many ways we, as testers, can create downtime in the system.

The second difficulty in attempting to test a system’s availability is that the mean time to recover component of the equation often contains human and process factors that are difficult to predict prior to the system going into production. For example, if a production database goes down and requires a database restart to bring the system back to a functional state, the time to fix the issue (the MTTR) can be anything from a few seconds if the recovery is automatic, to a few minutes if an on-site administrator needs to perform the restart and is alerted quickly, to several hours if the administrator is home in bed and has to respond to an alert on his or her cell phone.
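To see how much the human factor matters, the sketch below plugs three recovery times into the availability formula. The MTBF of 30 days and the specific recovery durations (10 seconds, 10 minutes, 4 hours) are assumptions picked to match the three scenarios described above, not measurements.

```python
def availability(mtbf_seconds: float, mttr_seconds: float) -> float:
    """Availability = MTBF / (MTBF + MTTR), both in the same unit."""
    return mtbf_seconds / (mtbf_seconds + mttr_seconds)


# Assumption: the database fails on average once every 30 days.
MTBF_SECONDS = 30 * 24 * 3600

# Assumed recovery times for the same failure under different conditions.
recovery_scenarios = {
    "automatic restart": 10,                  # a few seconds
    "on-site administrator": 10 * 60,         # a few minutes
    "administrator paged at home": 4 * 3600,  # several hours
}

results = {
    name: availability(MTBF_SECONDS, mttr_seconds)
    for name, mttr_seconds in recovery_scenarios.items()
}

for name, value in results.items():
    print(f"{name:<28} {value:.4%}")
```

Under these assumptions the same failure, occurring at the same rate, yields better than 99.999 percent availability when recovery is automatic and less than 99.5 percent when the administrator has to be woken up.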

In order to accurately predict our organization’s responsiveness to a failure situation, we would have to make our system fail at the worst possible time, under the same circumstances we expect in production. This may include failing our test system in the middle of the night, alerting our overnight support team, and having the administrator fix the issue as if it were a true production emergency happening at 3 a.m. For most organizations not supporting life-or-death systems, this level of testing simply doesn’t happen, so we’ve already made it impossible to measure availability before we move to production. Our release and deployment process can also directly impact the availability of our system if it is unpredictable (nonrepeatable) or if the system has to be brought down every time we release.

The point here is that there are factors in play that are not directly testable in a preproduction environment without significant cost and effort, which companies are often not willing or able to support prior to a production release. These activities usually fall under production readiness rather than testing and are not often (but can be) driven by the testing team. However, as a member of the project team, if you hear conversations about “high availability” without also hearing about production readiness, you may have an opportunity to help your team better understand how downtime and availability are directly related.

Reducing Downtime and Increasing Availability

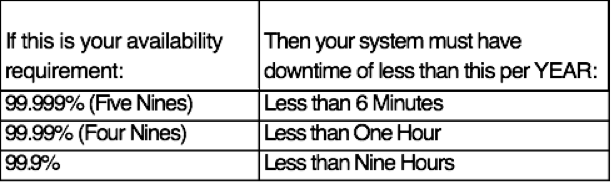

Many individuals are unaware of how impactful just a few minutes of downtime can be to a system’s availability.

As you can see, the amount of downtime a system can incur goes down significantly as your availability requirement goes up. The higher the availability target, the more aggressive the team must be in detecting possible causes of system failure and either eliminating them or building in redundancy or automatic recoverability.
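The relationship between an availability target and its downtime budget can also be computed directly. The sketch below assumes a 365-day year and a handful of commonly quoted targets; the function name and the list of targets are illustrative choices.

```python
MINUTES_PER_YEAR = 365 * 24 * 60  # assumes a non-leap year


def allowed_downtime_minutes(
    availability_percent: float,
    period_minutes: float = MINUTES_PER_YEAR,
) -> float:
    """Return the downtime budget, in minutes, for a given availability target."""
    if not 0 <= availability_percent <= 100:
        raise ValueError("availability must be between 0 and 100 percent")
    return period_minutes * (100 - availability_percent) / 100


# Illustrative targets, from "two nines" to "five nines".
budgets = {
    target: allowed_downtime_minutes(target)
    for target in (99.0, 99.9, 99.99, 99.999)
}

for target, minutes in budgets.items():
    print(f"{target}% -> {minutes:,.1f} minutes per year ({minutes / 60:.2f} hours)")
```

A 99 percent target leaves roughly 87.6 hours of downtime per year, while 99.999 percent leaves a little over five minutes, which is less time than most manual recovery procedures take.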

More and more, software systems are being developed to support some level of automatic recovery. The intention is to reduce recovery time to only a few seconds, or, potentially, down to zero. If high availability is important and automatic recovery functions are built into the system, then it is imperative that someone test these functions.

Start Failing Constantly

If we can’t measure every way our system can fail and we can’t precisely predict what our time to recover will be in every situation, is all hope lost? Of course not! In fact, the test team can directly contribute to increasing the MTBF of the system and, in some cases, decreasing the MTTR. Either of these contributions can have a direct impact on the system’s ability to be highly available. They correspond to two types of testing, failure testing and recoverability testing, which are often (but don’t have to be) performed together.

- Failure testing has a goal of increasing MTBF by reducing the number of ways a system can be made to fail.

- Recoverability testing has a goal of reducing MTTR by reducing the time it takes a failing system to recover.

As is true with functional testing, it is quite possible and, in fact, desirable to automate as much of your failure and recoverability testing as possible. While you can test certain types of failures, such as network or power outages, by simply pulling cables in your test lab, automation adds a level of repeatability to the process that becomes extremely important in the long term. Automating these tests is the difference between testing one time versus testing against every build and every release.
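An automated recoverability test can be as simple as injecting a failure, polling a health check, and timing how long the system takes to come back. The following is a minimal sketch of that idea, not a production harness: `measure_recovery_seconds`, `FakeService`, and the one-second recovery budget are all hypothetical. In a real test, the two callables would stop a process or drop a network link and query an actual health endpoint.

```python
import time
from typing import Callable


def measure_recovery_seconds(
    inject_failure: Callable[[], None],
    is_healthy: Callable[[], bool],
    timeout_s: float = 30.0,
    poll_interval_s: float = 0.05,
) -> float:
    """Inject a failure, poll until the system is healthy, return elapsed seconds."""
    if not is_healthy():
        raise RuntimeError("system must be healthy before injecting a failure")
    inject_failure()
    started = time.monotonic()
    deadline = started + timeout_s
    while time.monotonic() < deadline:
        if is_healthy():
            return time.monotonic() - started
        time.sleep(poll_interval_s)
    raise TimeoutError(f"system did not recover within {timeout_s} seconds")


class FakeService:
    """Stand-in for a real system: it recovers by itself after a fixed delay."""

    def __init__(self, recovery_delay_s: float) -> None:
        self.recovery_delay_s = recovery_delay_s
        self._down_until = 0.0

    def fail(self) -> None:
        self._down_until = time.monotonic() + self.recovery_delay_s

    def is_healthy(self) -> bool:
        return time.monotonic() >= self._down_until


service = FakeService(recovery_delay_s=0.2)
observed = measure_recovery_seconds(service.fail, service.is_healthy)
print(f"recovered in {observed:.2f} seconds")

# Hypothetical recovery budget; fail the build if recovery is too slow.
assert observed <= 1.0, "recovery exceeded the one-second budget"
```

Because the harness only depends on two callables, the same timing loop can be reused for every failure type the team decides to automate and run against every build.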

The mantra here, borrowed from Netflix and the lessons the company learned around failure testing, is “The best way to avoid failure is to fail constantly.” The key is not necessarily to go from never testing failures to always testing failures, but to move toward that model in steps that your organization can undertake.

If you are doing nothing, do something. Even a test as simple as pulling the network cable and seeing how your application responds is a first step toward understanding the failure and recovery characteristics of your system.