Performance is of utmost importance in a Web application. This paper discusses the types of testing that should be performed when measuring the performance of a Web application, the different workload models, and ways of measuring response times using automated tools. A good performance tool aids the testing process and is often the most efficient way to measure performance in a project. End-to-end performance testing is typically the last stage of a Web development iteration cycle. This paper also discusses some crucial design factors that improve the performance of a system.

What Is the Difference between Load and Stress Testing?

A load test simulates real-life use of a Web application to assess how the application will behave under actual conditions of use. It is performed under expected conditions, both before the application is deployed and throughout its life cycle. A stress test, in contrast, determines how heavy a load the Web application can handle: a very large load is generated as quickly as possible to push the application to its limit. To guarantee a realistic simulation of the workload created by actual users, the test environment must be as close to the production environment as possible. A good load test should generate each type of traffic expected in production, using real software and hardware rather than simulated components.
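To make the distinction concrete, here is a minimal Python sketch of a load generator built on the standard library's thread pool. The `transaction()` function is a hypothetical stand-in for one user request (it just sleeps); a load test runs the expected number of virtual users with pauses between requests, while a stress test raises the user count and removes the pauses to push the system toward its limit.

```python
import time
from concurrent.futures import ThreadPoolExecutor


def transaction() -> float:
    """Hypothetical stand-in for one request to the application under test.

    Returns the measured response time in seconds.
    """
    start = time.perf_counter()
    time.sleep(0.01)  # simulate server work; replace with a real HTTP call
    return time.perf_counter() - start


def virtual_user(iterations: int, think_time: float) -> list[float]:
    """Run one virtual user: repeat the transaction, pausing between requests."""
    timings = []
    for _ in range(iterations):
        timings.append(transaction())
        time.sleep(think_time)  # pause the way a real user would
    return timings


def run_test(users: int, iterations: int, think_time: float) -> list[float]:
    """Run `users` virtual users concurrently and collect every response time."""
    with ThreadPoolExecutor(max_workers=users) as pool:
        futures = [pool.submit(virtual_user, iterations, think_time) for _ in range(users)]
        return [t for f in futures for t in f.result()]


if __name__ == "__main__":
    # Load test: expected number of users, realistic think time.
    load = run_test(users=5, iterations=3, think_time=0.05)
    # Stress test: many more users and no think time, to find the breaking point.
    stress = run_test(users=50, iterations=3, think_time=0.0)
    print(f"load:   {len(load)} requests, avg {sum(load) / len(load):.3f}s")
    print(f"stress: {len(stress)} requests, avg {sum(stress) / len(stress):.3f}s")
```

A real tool would replace the sleep in `transaction()` with an HTTP request; the structure (users as concurrent workers, think time as a per-user pause) is the part the sketch is meant to show.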

What Is a Workload?

A workload is the total burden of activity placed on the Web application under test. This burden consists of a certain number of virtual users who process a defined set of transactions in a specified time period. Defining an appropriate workload is the crux of performance testing.
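The three parts of that definition can be captured in a small data structure. The following is a sketch only; the `Workload` class and its field names are illustrative assumptions, not taken from any particular tool.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Workload:
    """A workload: virtual users, a set of transactions, and a time period.

    Field names are illustrative assumptions, not from a specific tool.
    """
    virtual_users: int
    transactions: tuple[str, ...]  # the defined set of transactions each user runs
    duration_s: float              # the specified time period, in seconds

    def total_transactions(self, iterations_per_user: int) -> int:
        """Transactions issued if each user repeats the set `iterations_per_user` times."""
        return self.virtual_users * len(self.transactions) * iterations_per_user

    def target_rate(self, iterations_per_user: int) -> float:
        """Average transactions per second needed to finish within the time period."""
        return self.total_transactions(iterations_per_user) / self.duration_s


if __name__ == "__main__":
    w = Workload(virtual_users=20, transactions=("login", "search", "logout"), duration_s=600.0)
    print(w.total_transactions(10), w.target_rate(10))
```

For example, 20 users each running a three-step transaction set 10 times in 600 seconds means the application must sustain an average of one transaction per second.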

Typical Workload Models:

- A steady state workload is the simplest workload model used in load testing: a constant number of virtual users is run against the Web application for the duration of the test.

- An increasing workload model helps testers find the limit of a Web application's capacity. The load test starts with only a small number of virtual users, and more virtual users are then added to the workload step by step.

- In a dynamic workload model, the number of virtual users can be changed while the test is running, and the simulation time is not fixed.

- In a queuing workload model, transactions are scheduled according to a prescribed arrival rate. The interval between arrivals is random, with an average calculated from the simulation time and the number of transactions specified.
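Three of these models can be expressed as simple schedules. This is a hedged sketch with hypothetical function names: the steady and increasing models return the number of virtual users active at time `t`, and the queuing model returns transaction start times. Drawing the random intervals from an exponential distribution (Poisson arrivals) is an assumption of this sketch; a given tool may use a different distribution.

```python
import math
import random


def steady_users(users: int, t: float) -> int:
    """Steady state: the same number of virtual users for the whole test."""
    return users


def increasing_users(start: int, step: int, interval_s: float, maximum: int, t: float) -> int:
    """Increasing workload: add `step` users every `interval_s` seconds, up to `maximum`."""
    return min(maximum, start + step * math.floor(t / interval_s))


def queuing_arrivals(simulation_s: float, transactions: int, seed: int = 0) -> list[float]:
    """Queuing workload: schedule transaction start times at random intervals.

    The average interval is simulation_s / transactions. Exponentially distributed
    intervals are an assumption here, not a requirement of the model.
    """
    rng = random.Random(seed)
    mean_interval = simulation_s / transactions
    arrivals, t = [], 0.0
    for _ in range(transactions):
        t += rng.expovariate(1.0 / mean_interval)  # expovariate takes the rate, 1/mean
        arrivals.append(t)
    return arrivals


if __name__ == "__main__":
    print([increasing_users(5, 5, 60.0, 50, t) for t in (0, 60, 120, 600)])
    print(queuing_arrivals(60.0, 5))
```

The dynamic model is not shown because it depends on the tester changing the user count while the test runs rather than on a precomputed schedule.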

Understanding the Architecture and Test Environment

Before choosing the right model for performance tests, it is important to understand the application's architecture. Testing in an environment that does not mimic production produces misleading results. It is crucial to know the specifications of the Web server, the databases, and any other external dependencies the application might have. Building the test environment as close to production as possible makes the testing effort much easier and is the key to obtaining accurate results.
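One lightweight way to act on this is to record the specifications of both environments and list every mismatch before testing. The sketch below assumes the specs are kept as simple key/value pairs; the `parity_report` function and the example keys are hypothetical.

```python
def parity_report(test_env: dict[str, str], production: dict[str, str]) -> list[str]:
    """List every specification where the test environment differs from production."""
    issues = []
    for key in sorted(production.keys() | test_env.keys()):
        prod_value = production.get(key, "<missing>")
        test_value = test_env.get(key, "<missing>")
        if prod_value != test_value:
            issues.append(f"{key}: test={test_value!r}, production={prod_value!r}")
    return issues


if __name__ == "__main__":
    # Hypothetical specs; record whatever matters for your architecture.
    production = {"web_server_cpus": "8", "db_server": "dedicated", "payment_service": "live"}
    test_env = {"web_server_cpus": "2", "db_server": "dedicated", "payment_service": "stub"}
    for line in parity_report(test_env, production):
        print(line)
```

Each reported line is a reason the measured response times may not carry over to production.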

Writing Automated Scripts

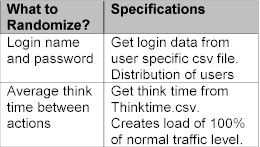

Record and playback is a common feature in any tool. But automated testers should realize that simply replaying the Web traffic recorded from the application will not simulate a realistic workload. Once you have your basic recorded script, you have to parameterize it by declaring variables and creating data files associated with the script, call functions to parse the data files, store the values, and input them to the script. A good example is simulating multiple logins to an application. In this case you might store the user names and passwords in a comma-separated values (CSV) file or some other format. It is also a good idea to randomize data wherever possible to broaden data coverage. You can also add think times, the pauses a real user makes between actions, to the script. Verifying the headers and HTML or XML content returned from the server involves writing additional functions in the automated script. Once the basic framework for the script is complete, the next step is to structure the script around the scenarios that you want to include in the load or stress tests.
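The parameterization steps for the multiple-login example might look like the following Python sketch. It assumes an inline CSV string in place of a data file on disk, and the form field names `user` and `pass` are hypothetical; a real script would post whatever fields the recorded traffic contains.

```python
import csv
import io
import random

# Hypothetical data file contents; in practice this would be a .csv file on disk.
USERS_CSV = """username,password
alice,secret1
bob,secret2
carol,secret3
"""


def load_credentials(csv_text: str) -> list[dict[str, str]]:
    """Parse the data file into one dict per row (the 'store the values' step)."""
    return list(csv.DictReader(io.StringIO(csv_text)))


def login_request(row: dict[str, str]) -> dict[str, str]:
    """Build the form fields for a login request from one data row.

    The field names are assumptions; use whatever the recorded script posts.
    """
    return {"user": row["username"], "pass": row["password"]}


def think_time(rng: random.Random, low: float = 1.0, high: float = 5.0) -> float:
    """Randomized pause, in seconds, to insert between user actions."""
    return rng.uniform(low, high)


def verify_response(status: int, body: str, expected_text: str) -> bool:
    """Check the status code and that the returned HTML contains the expected text."""
    return status == 200 and expected_text in body


if __name__ == "__main__":
    rng = random.Random(42)
    rows = load_credentials(USERS_CSV)
    for _ in range(3):
        row = rng.choice(rows)  # randomize which user logs in
        print(login_request(row), f"then wait {think_time(rng):.1f}s")
```

Seeding the random generator keeps a run reproducible while still varying the data; dropping the seed gives different users on every run.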

Typical Randomization Factors

Structure and Format the Automated Script

Depending on the mix of transactions that you want to include in the automated script, you can create different types of virtual users by defining user types within the script. Once you have designed the user types and the actions that each user type should perform, you are essentially ready to run the script. Defining user types also lets you combine them in different workload models, adding variety to your tests. Keeping all functions and methods together in a separate file makes the automated script shorter and easier to read and maintain.
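A minimal sketch of user types and a weighted transaction mix follows. The user type names, their actions, and the mix weights are all hypothetical; `random.Random.choices` assigns each virtual user a type in proportion to the weights.

```python
import random

# Hypothetical user types: each maps to the ordered actions that user performs.
USER_TYPES = {
    "browser": ["home", "search", "view_item"],
    "buyer": ["login", "search", "add_to_cart", "checkout", "logout"],
    "admin": ["login", "run_report", "logout"],
}


def assign_user_types(total_users: int, mix: dict[str, float], seed: int = 0) -> list[str]:
    """Assign a user type to each virtual user according to the weights in `mix`."""
    rng = random.Random(seed)
    names = list(mix)
    weights = [mix[n] for n in names]
    return rng.choices(names, weights=weights, k=total_users)


def actions_for(user_type: str) -> list[str]:
    """Return the scripted actions for one user type."""
    return USER_TYPES[user_type]


if __name__ == "__main__":
    types = assign_user_types(100, {"browser": 0.7, "buyer": 0.25, "admin": 0.05})
    print({t: types.count(t) for t in USER_TYPES})
```

In line with the advice above, `USER_TYPES` and the helper functions could live in a separate module so that each test script only imports them and chooses a mix.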

Baselining and Benchmarking the Tests

A benchmark test is conducted to profile performance across different software releases. Benchmarking lets you run tests under similar conditions in order to compare results across releases, to verify that new features and functions do not degrade the response times of the Web application under test, and to find system bottlenecks. Baselining, by contrast, means defining what each virtual user does and measuring the response times of a single virtual user performing a given transaction. Once you have data for a single user, you can ramp up the number of users linearly to n users and measure the response times to look for deviations from the baseline.
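Both ideas reduce to simple calculations. The Python sketch below, with hypothetical function names, generates a linear ramp that starts from the single-user baseline and flags transactions whose average response time in a new release grew by more than a chosen tolerance; the 10% default is an arbitrary assumption.

```python
def linear_ramp(max_users: int, steps: int) -> list[int]:
    """User counts for a linear ramp from 1 user (the baseline) up to max_users."""
    if steps < 2:
        return [max_users]
    return [round(1 + i * (max_users - 1) / (steps - 1)) for i in range(steps)]


def regressions(baseline: dict[str, float], current: dict[str, float],
                tolerance: float = 0.10) -> dict[str, float]:
    """Transactions whose response time grew by more than `tolerance` (a fraction).

    Returns the relative increase for each regressed transaction.
    """
    result = {}
    for name, base in baseline.items():
        if name in current and base > 0:
            change = (current[name] - base) / base
            if change > tolerance:
                result[name] = change
    return result


if __name__ == "__main__":
    print(linear_ramp(10, 4))
    previous_release = {"login": 0.50, "search": 1.00}  # average seconds
    new_release = {"login": 0.52, "search": 1.30}
    print(regressions(previous_release, new_release))
```

Here a 4% slower login stays within tolerance, while a 30% slower search is reported for investigation.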

Determining the Capacity of the System

In our case, once the baseline test was in place, we increased the number of virtual users to find the system's limit. We encountered a large number of errors when we ramped up to about ten users. Typical errors were socket errors, access denied, and socket connection refused, depending on the configuration of the Web server. This can happen if a simulated version of the software is used or if any of the application's dependencies are unavailable at the time of the test. This poses a serious problem, because the response times will not accurately reflect the production environment. Under these circumstances, we need to recognize when to stop increasing the load. Once we know the threshold number of virtual users the Web server can handle, we can specify the workload scenarios for our load and stress tests.
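The stopping rule can be written as a loop that raises the user count until the error rate crosses a limit. In this sketch `run_step` is a hypothetical hook that runs the test at a given load and reports requests sent and failed; `simulated_step` is a fake that starts failing at ten users only to mirror the situation described above, and the 5% error budget is an assumption.

```python
from typing import Callable


def find_capacity(run_step: Callable[[int], tuple[int, int]], start: int = 1,
                  step: int = 1, max_users: int = 1000,
                  max_error_rate: float = 0.05) -> int:
    """Increase virtual users until the error rate exceeds `max_error_rate`.

    Returns the last user count that stayed within the error budget,
    or 0 if even `start` users exceeded it.
    """
    capacity = 0
    for users in range(start, max_users + 1, step):
        sent, failed = run_step(users)
        if sent == 0 or failed / sent > max_error_rate:
            break  # stop ramping: errors such as refused connections dominate
        capacity = users
    return capacity


def simulated_step(users: int) -> tuple[int, int]:
    """Hypothetical stand-in: error-free below 10 users, then a third of requests fail."""
    sent = users * 100
    failed = 0 if users < 10 else sent // 3
    return sent, failed


if __name__ == "__main__":
    print(find_capacity(simulated_step))
```

The returned threshold is the upper bound to use when defining the load and stress scenarios.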

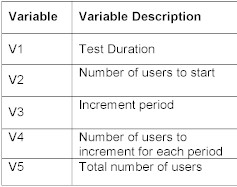

Designing Test Variables

Test variables provide a controlled way to alter the workload. Which variables are used depends on the maturity of the software being tested. The variables are named Vx (e.g., V1, V2).
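Once the variables and their levels are chosen, each combination defines one test run. In the sketch below the Vx naming follows the paper, but what V1, V2, and V3 control, and their values, are hypothetical; `itertools.product` expands them into the full test matrix.

```python
from itertools import product

# Hypothetical test variables; only the Vx naming comes from the text.
TEST_VARIABLES = {
    "V1": [5, 10],                   # number of virtual users
    "V2": [0.0, 2.0],                # think time in seconds
    "V3": ["steady", "increasing"],  # workload model
}


def expand_variables(variables: dict[str, list]) -> list[dict[str, object]]:
    """Expand the variables into every combination, one dict per test run."""
    names = list(variables)
    return [dict(zip(names, values)) for values in product(*variables.values())]


if __name__ == "__main__":
    for run in expand_variables(TEST_VARIABLES):
        print(run)
```

With three variables at two levels each, this yields eight runs; holding all but one variable fixed across runs isolates that variable's effect on response times.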

What to Measure?

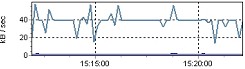

Most performance tools measure round-trip response times. Round-trip time is the time from when the client sends a request until it receives the response from the server. It therefore includes network time and latency, which are not constant and depend on the environment in which the test is conducted, as well as time spent waiting on external dependencies such as databases. Hence, round-trip response times alone are not an accurate indicator of the application's performance. You should measure server-side response times from the server logs and compare them with the round-trip times to chart how response times vary with network traffic at different times of the day. It may also be a good idea to run an all-day workload and sample response times at regular intervals to obtain an average. In our case, we also measured parameters such as the average number of transactions/sec, hits/sec, and throughput, as shown in Graphs A, B, and C.
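The comparison and rate calculations above can be sketched as follows. The `Sample` fields are assumptions: each sample pairs a client-measured round-trip time with the server-side time taken from the log for the same request, plus bytes received and the number of hits (HTTP requests) the transaction issued.

```python
from dataclasses import dataclass


@dataclass
class Sample:
    """One measured transaction. Field names are assumptions for this sketch."""
    round_trip_s: float  # measured at the client by the load tool
    server_s: float      # taken from the server log for the same request
    bytes_received: int
    hits: int            # HTTP requests the transaction issued (page plus resources)


def summarize(samples: list[Sample], duration_s: float) -> dict[str, float]:
    """Compute average times and per-second rates over one test run."""
    n = len(samples)
    avg_rt = sum(s.round_trip_s for s in samples) / n
    avg_server = sum(s.server_s for s in samples) / n
    return {
        "avg_round_trip_s": avg_rt,
        "avg_server_s": avg_server,
        # Time not accounted for by the server log: network and client overhead.
        "avg_overhead_s": avg_rt - avg_server,
        "transactions_per_s": n / duration_s,
        "hits_per_s": sum(s.hits for s in samples) / duration_s,
        "throughput_bytes_per_s": sum(s.bytes_received for s in samples) / duration_s,
    }


if __name__ == "__main__":
    run = [Sample(0.5, 0.3, 1000, 4), Sample(0.7, 0.4, 3000, 6)]
    print(summarize(run, duration_s=2.0))
```

Running the same summary on samples taken at different times of day shows whether changes in round-trip time come from the server or from the network overhead.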

Graph A

Graph B

Graph C

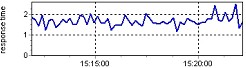

Reporting Performance Numbers

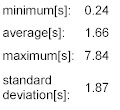

Performance numbers are typically reported as average, minimum, and maximum response times. If the performance test runs for a specified time, the average response time is the most important indicator of performance. Maximum and minimum response times help pinpoint which transaction and input produced an unusually good or bad response time, so that the cause can be investigated. Graph D helped us in analyzing response times.
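A minimal reporting sketch: given hypothetical `(transaction, input_value, response_time_s)` records, it computes the average, minimum, and maximum per transaction and keeps the input that produced each extreme, so outliers can be traced back to specific data.

```python
from collections import defaultdict


def report(results: list[tuple[str, str, float]]) -> dict[str, dict[str, object]]:
    """Average, minimum, and maximum response time per transaction.

    `results` holds (transaction, input_value, response_time_s) tuples. The input
    that produced the min and max is kept so those outliers can be investigated.
    """
    by_txn = defaultdict(list)
    for txn, value, rt in results:
        by_txn[txn].append((rt, value))
    summary = {}
    for txn, rows in by_txn.items():
        times = [rt for rt, _ in rows]
        fastest = min(rows)  # tuples compare by response time first
        slowest = max(rows)
        summary[txn] = {
            "avg": sum(times) / len(times),
            "min": fastest[0], "min_input": fastest[1],
            "max": slowest[0], "max_input": slowest[1],
        }
    return summary


if __name__ == "__main__":
    data = [("search", "shoes", 0.4), ("search", "x", 1.6),
            ("search", "hats", 1.0), ("login", "alice", 0.2)]
    for txn, stats in report(data).items():
        print(txn, stats)
```

In this made-up data, the one-character search term `x` produced the slowest search, which is exactly the kind of lead the minimum and maximum are meant to surface.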

Graph D

Monitoring the Web Server

Monitoring the Web server is an integral part of performance engineering. While running different workloads with multiple users, we always logged performance counters such as processor time, private bytes, thread count, and handle count. In most cases, our hardware could not handle the load and processor time exceeded 100%, so the results were not accurate. It is essential that performance counters be logged and analyzed. Doing so can prevent unpleasant surprises when the application is tested in the production environment, and it also gives you an estimate of how many virtual users your environment can handle during performance integration tests.
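Logged counters are only useful if someone checks them against the test results. The sketch below assumes the counters were exported as CSV with the hypothetical column names shown, and flags the sample times where processor time met or exceeded a chosen limit (90% here, an arbitrary assumption), so response times from those intervals can be treated with suspicion.

```python
import csv
import io

# Hypothetical counter log, e.g. exported from the server's performance monitor.
COUNTER_LOG = """time,processor_pct,private_bytes,thread_count,handle_count
10:00:00,45.0,210000000,120,3400
10:00:15,97.5,250000000,160,3900
10:00:30,100.0,260000000,175,4100
"""


def saturated_samples(log_text: str, cpu_limit: float = 90.0) -> list[str]:
    """Return the timestamps where processor time met or exceeded `cpu_limit` percent.

    Response times measured during these intervals reflect saturated hardware
    rather than the application itself, so they should be flagged or discarded.
    """
    rows = csv.DictReader(io.StringIO(log_text))
    return [r["time"] for r in rows if float(r["processor_pct"]) >= cpu_limit]


if __name__ == "__main__":
    print(saturated_samples(COUNTER_LOG))
```

The same pattern extends to the other counters, for example flagging steady growth in private bytes or handle count, which can point to leaks under sustained load.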

Conclusion

End-to-end performance testing of Web applications is a complex and time-consuming task. The methodical approach proposed in this paper provides a structured way of testing, and applying these techniques at every phase or iteration of the Web development life cycle also offers a practical way to improve the performance of a Web application.
