One of the Agile principles is simplicity: the art of maximizing the amount of work not done is essential. Kubernetes provides simplicity through features that apply the Single Responsibility Principle for decoupling, and by automating tasks such as scaling, resource allocation, and updates.

Kubernetes is the leading container orchestration platform. Agile software development sets out principles such as customer collaboration, responding quickly to change, and delivering working software over short timescales. Software development that makes use of Kubernetes applies several of these principles.

Individuals and Interactions

Even though Kubernetes is an automation and orchestration framework, it involves collaboration among individuals at various stages including:

- Choosing a host cloud platform

- Choosing availability zones: Kubernetes is a distributed framework and the availability zones must be selected through collaboration among individuals

- Choosing an application platform: Kubernetes can be run standalone, but users need to decide whether an application platform such as OpenShift should be used. OpenShift, with an embedded Kubernetes cluster manager, is an open source platform providing full application life-cycle management

Working Software

Working software is a top priority with Kubernetes. Kubernetes is based on a decoupled, microservices-based architecture so that no single point of failure disrupts the functionality of the software. Upgrades and updates can be made without any significant downtime. High availability and fault tolerance are built into a distributed Kubernetes cluster.

Customer Collaboration

A customer is involved in the various aspects of a Kubernetes cluster. Some examples of user involvement are discussed next.

A user may control scheduling with a scheduling policy made up of predicates and priority functions. Predicates filter out nodes that do not meet the requirements of a Pod. Priority functions rank the nodes that remain. Node affinity may be set using node labels and annotations to match Pods with nodes. A Pod is scheduled onto the node with the highest priority.
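The filter-then-rank flow can be sketched in a few lines of Python. This is an illustrative model only, not the Kubernetes scheduler: the `Node` and `PodSpec` classes, the two predicates, and the single priority function are all hypothetical names chosen for the example.

```python
from dataclasses import dataclass, field


@dataclass
class Node:
    name: str
    free_cpu_millicores: int
    free_memory_mib: int
    labels: dict = field(default_factory=dict)


@dataclass
class PodSpec:
    name: str
    cpu_millicores: int
    memory_mib: int
    node_selector: dict = field(default_factory=dict)


def fits_resources(pod, node):
    """Predicate: the node must have enough free CPU and memory."""
    return (node.free_cpu_millicores >= pod.cpu_millicores
            and node.free_memory_mib >= pod.memory_mib)


def matches_labels(pod, node):
    """Predicate: every label the Pod asks for must be present on the node."""
    return all(node.labels.get(k) == v for k, v in pod.node_selector.items())


def spare_cpu_after_placement(pod, node):
    """Priority function (assumed for this sketch): prefer the most CPU left over."""
    return node.free_cpu_millicores - pod.cpu_millicores


def schedule(pod, nodes):
    """Filter nodes with the predicates, rank the survivors, return the best."""
    feasible = [n for n in nodes
                if fits_resources(pod, n) and matches_labels(pod, n)]
    if not feasible:
        return None  # no node qualifies, so the Pod would stay pending
    return max(feasible, key=lambda n: spare_cpu_after_placement(pod, n))


nodes = [
    Node("node-a", 500, 1024, {"disk": "ssd"}),    # too little CPU
    Node("node-b", 2000, 4096, {"disk": "ssd"}),   # passes both predicates
    Node("node-c", 4000, 8192, {"disk": "hdd"}),   # wrong label
]
pod = PodSpec("web", 1000, 512, {"disk": "ssd"})
chosen = schedule(pod, nodes)
print(chosen.name if chosen else "unschedulable")
```

Keeping predicates and priority functions as separate small functions mirrors the policy described above: a user can add or remove a rule without touching the rest.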

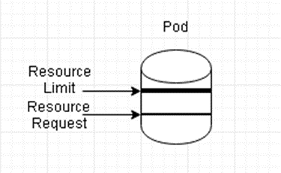

A user may set resource usage. While the capacity of a Kubernetes node is fixed in terms of allocatable resources (CPU and memory), Kubernetes offers some flexibility in how the Pods running on a node consume those resources. A Pod has fixed minimum resource requirements (CPU and memory), with provision for the Pod to use more than the minimum requested resources if they are available. Kubernetes has a flexible resource usage design pattern based on “requests” and “limits” as shown in Figure 1. A “request” is the minimum resource a container in a Pod requests. A “limit” is the maximum resource that could be allocated to a container.

Figure 1. Resource request and limit
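As a rough sketch of the request/limit pattern, the Python below builds a `resources` stanza as a plain dictionary and models how much CPU a container could consume. The `requests` and `limits` keys follow the Kubernetes container spec; the helper names and the `usable_cpu` model are assumptions made for illustration and simplify what the kubelet actually does.

```python
def container_resources(cpu_request_m, cpu_limit_m, mem_request_mib, mem_limit_mib):
    """Build the `resources` stanza of a container spec as a plain dict."""
    if cpu_request_m > cpu_limit_m or mem_request_mib > mem_limit_mib:
        raise ValueError("a request must not exceed its limit")
    return {
        "requests": {"cpu": f"{cpu_request_m}m", "memory": f"{mem_request_mib}Mi"},
        "limits": {"cpu": f"{cpu_limit_m}m", "memory": f"{mem_limit_mib}Mi"},
    }


def usable_cpu(request_m, limit_m, spare_on_node_m):
    """Simplified model: the request is guaranteed, spare capacity is
    usable on top of it, and the limit caps the total."""
    return min(limit_m, request_m + max(spare_on_node_m, 0))


resources = container_resources(250, 500, 64, 128)
print(resources)
print(usable_cpu(250, 500, 0))     # busy node: only the request is available
print(usable_cpu(250, 500, 100))   # some spare capacity: request plus spare
print(usable_cpu(250, 500, 1000))  # idle node: capped at the limit
```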

Responding to Change

Responding to change over following a fixed plan is one of the Agile values. Kubernetes is designed on a flexible architecture that responds to change quickly, an example of which is discussed next.

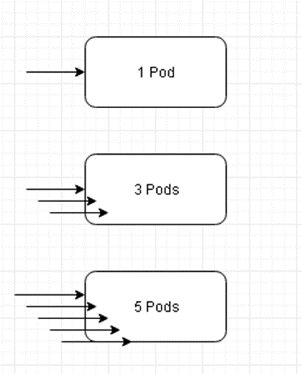

The load in a Kubernetes cluster does not follow a fixed configuration setting; it varies with application requirements and can increase or decrease. The Kubernetes cluster manager is able to scale a cluster of containers (Pods) as required. If the load increases, the cluster scales up (increases the number of Pods). If the load decreases, the cluster scales down (decreases the number of Pods). In a production-level cluster in which the load is not predictable and high availability is a requirement, autoscaling becomes imperative. Autoscaling makes use of a Horizontal Pod Autoscaler that has a pre-configured minimum and maximum number of Pods within which to scale a cluster. When load is increased on a running cluster, the Horizontal Pod Autoscaler automatically increases the number of Pods in proportion to the load, up to the configured maximum number of Pods, as shown in Figure 2.

Figure 2. Increasing the load increases the number of Pods
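The proportional scaling rule can be sketched as follows. The core calculation, the current replica count multiplied by the ratio of the observed metric to the target metric and rounded up, follows the formula given in the Kubernetes Horizontal Pod Autoscaler documentation. The function name and the sample numbers are illustrative, and details such as the tolerance band and stabilization windows are left out.

```python
import math


def desired_replicas(current_replicas, current_metric, target_metric,
                     min_replicas, max_replicas):
    """Scale in proportion to load, then clamp to the configured bounds."""
    raw = math.ceil(current_replicas * current_metric / target_metric)
    return max(min_replicas, min(max_replicas, raw))


# Target is 50% average CPU utilization; bounds are 2 to 10 Pods.
print(desired_replicas(3, 90, 50, 2, 10))   # load above target: scale up
print(desired_replicas(6, 90, 50, 2, 10))   # would exceed the maximum: clamped
print(desired_replicas(4, 20, 50, 2, 10))   # load below target: scale down
```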

Early and Continuous Delivery of Valuable Software

Continuous delivery of software is central to Agile principles. Kubernetes-based software does not incur a significant downtime, as illustrated by one of the Kubernetes features below.

Continuous Deployment follows from this principle. Its objective is to minimize the lead time between a new, upgraded version of the software and the version currently being used in production.

If the Docker image specification or the Kubernetes controller specification were to change, a running application should not need to be shut down to deploy the new image or controller configuration. Shutting down an application would cause the application to become unavailable.

Kubernetes provides rolling updates for applying updates and upgrades to running software. During a rolling update, running Pods are terminated and new Pods for the new software are started without significant downtime. The running Pods are shut down using a “graceful termination” mechanism, which gives each container the opportunity to persist its in-memory state and close its open connections before it stops.

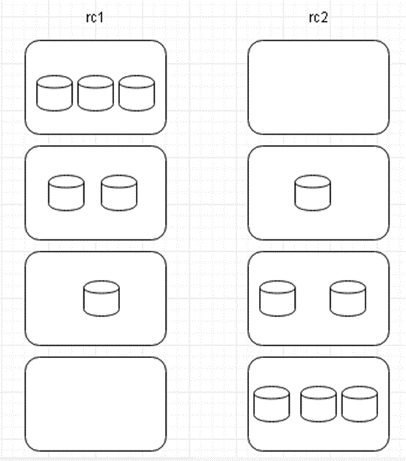

As illustrated in Figure 3 for a rolling update of replication controller rc1 to rc2, rc1 initially has 3 Pods and rc2 has 0 Pods. In the next stage, rc1 has 2 Pods and rc2 has 1 Pod. In the subsequent stage, rc1 has 1 Pod and rc2 has 2 Pods. When the rolling update is completed, rc1 has 0 Pods and rc2 has 3 Pods. The rolling update is performed 1 Pod at a time.

Figure 3. Rolling update
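The stages of the rc1 to rc2 update can be reproduced with a small simulation. It only tracks Pod counts per controller, one Pod at a time; the `rolling_update` function is a hypothetical helper for this example and does not model readiness checks or graceful termination.

```python
def rolling_update(old_replicas, step=1):
    """Yield (old, new) Pod counts at each stage of the update."""
    old, new = old_replicas, 0
    yield old, new
    while old > 0:
        moved = min(step, old)
        old -= moved   # Pods removed from the old controller
        new += moved   # Pods started under the new controller
        yield old, new


stages = list(rolling_update(3))
for rc1, rc2 in stages:
    print(f"rc1={rc1} rc2={rc2} total={rc1 + rc2}")
```

The total number of Pods stays constant at every stage, which is why the application remains available throughout the update.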

One of the requirements of a rolling update is for multiple controllers to be associated with a service, at least for the short while during which the Pods of a running controller are deleted (a Pod at a time) and Pods for a new controller are started. In a rolling update, the controller and the service are decoupled, which follows the Single Responsibility Principle. If the controller and the service were tightly coupled, multiple controllers could not be associated with a single service as new controllers are created and running controllers are removed.
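One way to picture this decoupling is through label selectors: a service selects Pods by label rather than by naming a controller, so Pods from both controllers can back the same service during the update. The sketch below assumes hypothetical `app` and `version` labels and Pod names; it illustrates selector matching and is not Kubernetes code.

```python
def matches(selector, labels):
    """A Pod matches when it carries every key/value pair in the selector."""
    return all(labels.get(k) == v for k, v in selector.items())


def endpoints(selector, pods):
    """Names of the Pods a selector picks out."""
    return [p["name"] for p in pods if matches(selector, p["labels"])]


# Mid-update snapshot: two Pods left under rc1, one started under rc2.
pods = [
    {"name": "rc1-pod-1", "labels": {"app": "web", "version": "v1"}},
    {"name": "rc1-pod-2", "labels": {"app": "web", "version": "v1"}},
    {"name": "rc2-pod-1", "labels": {"app": "web", "version": "v2"}},
]

service_selector = {"app": "web"}                # the service ignores the version
rc1_selector = {"app": "web", "version": "v1"}   # each controller owns one version
rc2_selector = {"app": "web", "version": "v2"}

print(endpoints(service_selector, pods))
print(endpoints(rc1_selector, pods))
print(endpoints(rc2_selector, pods))
```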

Welcome Changing Requirements

Kubernetes supports changing requirements not just for an unpredictable, changing load or rolling updates, but also in routine configuration.

Consider environment variables such as a username and password that are used in multiple Pod definition files. One approach is to configure the username and password in each of the definition files. This is tedious to begin with, and if the username or password were to change, all the definition files would need to be updated as well. Kubernetes supports ConfigMap, a map of configuration properties that can be used in definition files for Pods, Replication Controllers, and other Kubernetes objects to configure environment variables, command arguments, and configuration files exposed through volumes. A single ConfigMap may specify multiple configuration properties as key/value pairs. ConfigMaps decouple the containers from the configuration, which makes applications more portable.
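The benefit of keeping configuration in one place can be sketched with plain dictionaries. The map name `db-config`, its keys, and the trimmed-down Pod definition layout are hypothetical and chosen for this example; real Pod definitions reference ConfigMap keys through the Pod spec rather than through this simplified structure.

```python
# Hypothetical ConfigMap contents; names and values are placeholders.
config_maps = {
    "db-config": {"db.username": "appuser", "db.password": "changeme"},
}


def pod_definition(name):
    """A trimmed-down Pod definition whose env vars point at ConfigMap keys."""
    return {
        "name": name,
        "env": [
            {"name": "DB_USERNAME", "configMap": "db-config", "key": "db.username"},
            {"name": "DB_PASSWORD", "configMap": "db-config", "key": "db.password"},
        ],
    }


def resolve_env(pod, maps):
    """Look up each environment variable's value in the referenced ConfigMap."""
    return {e["name"]: maps[e["configMap"]][e["key"]] for e in pod["env"]}


pods = [pod_definition("web"), pod_definition("worker")]

# Change the value once, in the ConfigMap; no Pod definition is edited.
config_maps["db-config"]["db.username"] = "newuser"
for pod in pods:
    print(pod["name"], resolve_env(pod, config_maps))
```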

Decoupling is central to how changing requirements are met. As another example, Kubernetes supports changing requirements by storing the data associated with a container or Pod in a decoupled volume. If data were made integral to a Docker container, the data would not be persistent, because it is removed when the container is shut down. The data would also be container-specific and could not be shared with other containers. Kubernetes volumes follow the Single Responsibility Principle (SRP) by decoupling the data from a container. A volume is just a directory, with or without data, on some medium that differs between volume types. A volume is specified in a Pod’s spec and shared across the containers in the Pod.

Kubernetes meets changing resource requirements that could vary from one development team to another using resource quotas. Resource requirements could not only vary among different teams, but also vary across the different phases of application development; application development could have different resource usage than application testing and an application in production.

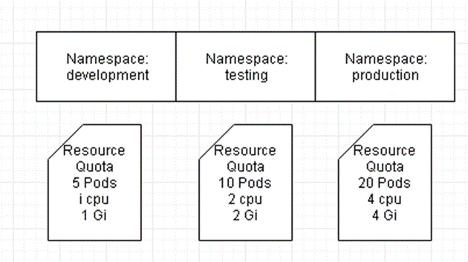

Kubernetes quotas are not completely elastic: each quota is a fixed upper limit that is flexible to some extent based on its scope. Resource quotas are specifications for limiting the use of certain resources in a particular namespace; the quota applies to the aggregate use within the namespace. The objective of resource quotas is to leave no development team behind. A fair share of resources is provided to different teams, with each team assigned a namespace with quotas. A secondary use of quotas is to create separate namespaces for production, development, and testing, because different phases of application development can have different resource requirements. Quotas can be set on compute resources (CPU and memory) and object counts (such as Pods, replication controllers, services, load balancers, and ConfigMaps, to list a few). Resource quotas for different development teams are illustrated in Figure 4.

Figure 4. Different ResourceQuotas for different namespaces
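The aggregate check a quota performs can be sketched as follows. The namespace names, resource keys, and numbers are hypothetical, and the `admit` function is a simplified stand-in for quota enforcement, not the Kubernetes implementation.

```python
def admit(quota, used, requested):
    """True if adding `requested` keeps every resource within the quota.
    Resources with no quota entry are treated as unlimited."""
    return all(used.get(r, 0) + amount <= quota.get(r, float("inf"))
               for r, amount in requested.items())


# One quota per namespace, covering compute resources and an object count.
quotas = {
    "dev": {"cpu_m": 4000, "memory_mib": 8192, "pods": 10},
    "test": {"cpu_m": 2000, "memory_mib": 4096, "pods": 5},
}
# Aggregate usage already recorded in each namespace.
usage = {
    "dev": {"cpu_m": 3500, "memory_mib": 2048, "pods": 4},
    "test": {"cpu_m": 500, "memory_mib": 1024, "pods": 5},
}

new_pod = {"cpu_m": 500, "memory_mib": 512, "pods": 1}
for namespace in quotas:
    print(namespace, admit(quotas[namespace], usage[namespace], new_pod))
```

Here the same Pod is admitted in `dev` but rejected in `test`, where the Pod count quota is already used up, even though CPU and memory are still available.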

