Testing professionals who are learning about agile often want to know how they can provide traceability among automated tests, features, and bugs and report on their testing progress. Here, Lisa Crispin gives an example of how her previous team worked together to integrate testing with coding and helped everyone see testing progress at a glance.

Many of these testers have never worked on a team that guides development with examples and tests, and they want to know how to manage their manual and automated test cases.

When I get these questions, I sometimes try to explain how testing in agile is inherently traceable. If we start developing each story by writing tests, then by the time the story is done, it is adequately covered by automated regression tests at various levels. In addition, a wide range of other testing activities will have been completed: exploratory testing; other business-facing tests that critique the product, such as usability testing; and technology-facing tests, such as performance and security testing.

I find it helps agile newbies more if I illustrate this idea by explaining how my own teams have made testing progress visible and kept track of which tests cover which features. Over the years, testers, programmers, and other generalizing specialists on my teams have collaborated successfully to guide development with executable tests and to complete other testing activities.

Here's an example of how my previous team worked together to integrate testing with coding and helped everyone see testing progress at a glance.

Planning Our Testing

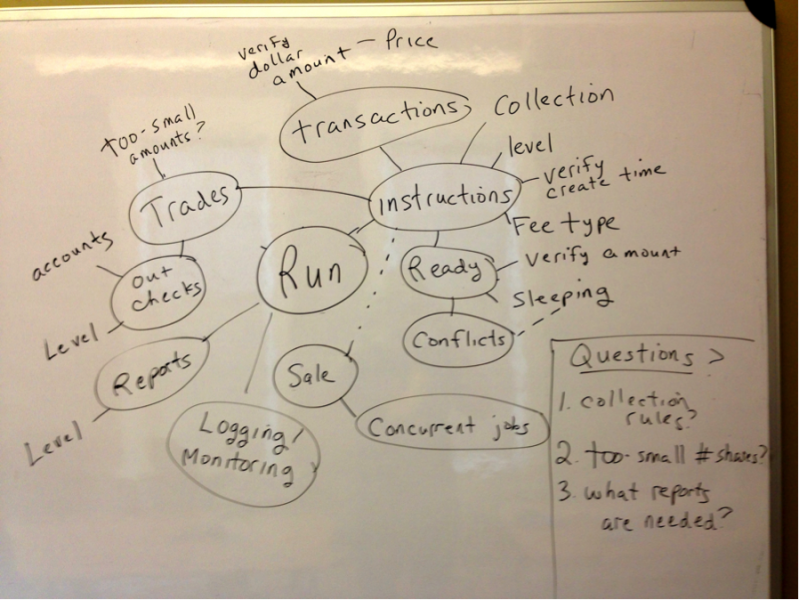

When our small team (four programmers, three testers, one or two database experts, and one or two system administrators) started on a new epic or big feature, we testers would mind-map test cases and the different types of testing we'd need to do. See Figure 1 for an example of a mind map my team used to plan testing for a theme that was a major rewrite of batch processing of mutual fund trades. We'd go over the mind map with the other team members and product owner, and often other business stakeholders as well. We often did the mind map on a big whiteboard in the team’s work area so everyone could see it and we could easily discuss it whenever we had questions.

We created a wiki page for each feature, organized by business functionality. Pictures of the mind maps went here, along with high-level test cases for each story and our exploratory testing charters. For big features with lots of stories, we'd have a wiki page for each story.

Testing and Coding

We used FitNesse to write acceptance tests at the API level that helped guide coding. When a programmer started work on a story, a tester would write a “happy path” FitNesse test illustrating an example of desired behavior for that story. As soon as the programmer got that test passing, the tester would start adding test cases, getting into boundary conditions and unhappy paths, and so on, and collaborate with the programmer to get all the tests passing. We often explored the feature via the FitNesse tests, putting different data inputs through the new production code. We didn't keep all of these as regression tests—just the ones needed to provide enough coverage.
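To make that progression concrete, here is a minimal sketch of the same idea in Python's `unittest` rather than FitNesse. Everything in it is an assumption made for illustration: the `shares_purchased` function, its rounding rule, and the test names are hypothetical and are not taken from the team's actual code or test suite. The first test is the "happy path" example a tester might write before coding starts; the remaining tests are the boundary and unhappy-path cases added once that first test passes.

```python
import unittest
from decimal import Decimal, ROUND_HALF_UP


def shares_purchased(amount: Decimal, nav: Decimal) -> Decimal:
    """Hypothetical API under test: shares bought for a dollar amount at a given
    net asset value (NAV), rounded to three decimal places (an assumed rule)."""
    if amount <= 0:
        raise ValueError("purchase amount must be positive")
    if nav <= 0:
        raise ValueError("NAV must be positive")
    return (amount / nav).quantize(Decimal("0.001"), rounding=ROUND_HALF_UP)


class PurchaseTradeAcceptanceTest(unittest.TestCase):
    # Written first: one example of the desired behavior for the story.
    def test_happy_path_whole_number_of_shares(self):
        self.assertEqual(
            shares_purchased(Decimal("1000.00"), Decimal("25.00")),
            Decimal("40.000"),
        )

    # Added after the happy path passes: boundary conditions.
    def test_fractional_shares_are_rounded_to_three_places(self):
        self.assertEqual(
            shares_purchased(Decimal("100.00"), Decimal("3.00")),
            Decimal("33.333"),
        )

    # Added after the happy path passes: unhappy paths.
    def test_zero_amount_is_rejected(self):
        with self.assertRaises(ValueError):
            shares_purchased(Decimal("0.00"), Decimal("25.00"))

    def test_zero_nav_is_rejected(self):
        with self.assertRaises(ValueError):
            shares_purchased(Decimal("1000.00"), Decimal("0.00"))
```

Saved in a file such as `test_purchase.py` (a hypothetical name), the tests could be run with `python -m unittest test_purchase`. Only the cases needed for adequate coverage would stay in the regression suite; the rest could be dropped after exploring.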

Continuous Integration

The FitNesse tests were checked into source control and tagged together with the production code so we always knew which version of the tests went with which version of the code. They were run as part of our continuous integration. We had a plug-in for Jenkins that let us see the FitNesse test results in the build, just like the JUnit test results, which provided a good history over time that could be understood by everyone on the team, even the business experts.

Dealing with Defects

If bugs were identified during or after coding, we wrote a test to reproduce the bug (or, where possible, the programmer did this at the unit level). The programmer fixed the production code, got the test green, and checked in the test together with the fix, so version control recorded which test went with which defect fix.
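As a rough illustration of that workflow, the sketch below shows a regression test written to reproduce a defect, next to the corrected production code. The `net_settlement` function, the imagined defect (sell trades being added instead of subtracted), and the test name are all hypothetical, invented for this example. Checking both into version control in the same commit is what ties the test to the fix.

```python
import unittest
from decimal import Decimal


def net_settlement(trades):
    """Hypothetical production code: net cash owed for a batch of
    (side, amount) trades. Buys increase the total; sells reduce it."""
    total = Decimal("0")
    for side, amount in trades:
        if side == "BUY":
            total += amount
        elif side == "SELL":
            # In the imagined defect, this branch added the amount
            # instead of subtracting it.
            total -= amount
        else:
            raise ValueError(f"unknown trade side: {side!r}")
    return total


class NetSettlementRegressionTest(unittest.TestCase):
    # Written first to reproduce the reported bug; it fails against the
    # defective code and passes once the fix is in place.
    def test_sell_trades_reduce_net_settlement(self):
        trades = [("BUY", Decimal("500.00")), ("SELL", Decimal("200.00"))]
        self.assertEqual(net_settlement(trades), Decimal("300.00"))
```

One possible convention, not described in the original workflow, is to mention the defect tracker's bug number in the commit message or the test's docstring so the link is also searchable.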

Many Types of Testing

We kept our stories small to help ensure a steady velocity and minimize work in process. As stories were delivered, we'd do manual exploratory testing using the charters or whatever we felt was appropriate, following our instincts. We'd do other types of testing as needed, such as security or reliability. We demoed early and often to customers and refined our tests and the feature according to their feedback. As we found problems, we showed them to the programmers, who usually were able to fix them right away.

We recorded our testing progress on our testing mind maps, including screenshots, and if we had bugs in our defect tracking system, we noted those bug numbers. Other types of testing, such as performance or reliability, would be planned in at appropriate times. For example, the programmers would do a spike to test scalability up front before the production code and tests were written.

Visibility

Between our story board, our continuous integration results, our wiki, and our mind maps, everyone, including the business folks, could see our progress at a glance. If we judged a particular area or story as high-risk, we could plan more extensive testing on it. Our process gave us confidence that we were delivering high-quality software. Areas that might be weak or inadequately tested were easier to spot.

I urge each software delivery team to get everyone together to brainstorm ways to ensure that each feature is supported by an adequate range of testing activities and a minimal but sufficient set of automated regression tests. Working toward these goals as a team increases your chances of providing effective automation, as well as the traceability and reporting on testing and quality that you want.
